Browser Permissions

This will be the last post (for now) on the browser side before we move into a discussion of the four specific APIs that make up the advertising-focused elements of the Google Privacy Sandbox: Topics, Protected Audience, Attribution Reporting, and Private Aggregation. The topic is browser permissions. Permissions are governed by two indirectly related core specifications: the Permissions API specification and the Permissions Policy specification.

- The Permissions API Specification defines the mechanism by which users and/or the user agent control which browser features web applications can access.

- The Permissions Policy Specification is a server-side mechanism. It allows website owners to set rules for which browser features and functionalities (e.g., geolocation) third parties embedded on a page (e.g., an iframe with an ad from www.thirdparty.com) can access. Permissions Policy was previously known as the Feature Policy Specification. On the whole, that history is not relevant to this discussion. However, there is one exception, the Feature Policy JavaScript API, which is relevant because it has not been updated.

Most browser features are delivered through some type of API. Historically, different APIs were inconsistent in how they handled their own permissions. For example, the Notifications API provided its own methods for requesting permission and checking permission status, whereas the Geolocation API did not. The Permissions API evolved to give developers a consistent way to work with permissions, and users a consistent experience.

There is also the fact that publishers and end users may have different requirements. An end user or user agent may allow access to geolocation information because the user wants a more customized experience, while a publisher’s terms and conditions may say it will not access geolocation information for any reason. The publisher wants a guarantee that it cannot obtain geolocation information from that browser by mistake. How, then, does the browser reconcile these two important but conflicting priorities?

The Permissions API effectively aggregates all security restrictions for the context: any requirement that an API be used in a secure context, Permissions Policy restrictions the publisher has applied to the document, requirements for user interaction, and user prompts. So, for example, if an API is blocked by a server-side permissions policy, the returned permission state is "denied" and the user (client side) is never prompted for access. The two specifications are thus two sides of a single coin, working together to let both users and top-level domain owners set the permissions they want without conflict.
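To make that aggregation concrete, here is a simplified model, not the specification's actual algorithm, of how a browser might fold these restrictions into the single state a page sees. The function name effectivePermissionState and the shape of its input object are hypothetical; the point is only that a policy block short-circuits to "denied" before the user's own setting is ever consulted.

```javascript
// Simplified, hypothetical model of how a browser folds several restrictions
// into the single state the Permissions API reports. Not the spec algorithm.
function effectivePermissionState({ secureContext, policyAllows, userSetting }) {
  // Powerful features generally require a secure (HTTPS) context.
  if (!secureContext) return "denied";
  // A Permissions Policy block from the publisher wins outright:
  // the user is never prompted.
  if (!policyAllows) return "denied";
  // Otherwise the user's (or user agent's) stored choice applies:
  // "granted", "denied", or "prompt" if no decision has been made yet.
  return userSetting ?? "prompt";
}

// The publisher blocks geolocation, so the user's "granted" setting is moot.
console.log(effectivePermissionState({
  secureContext: true,
  policyAllows: false,
  userSetting: "granted",
})); // denied
```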

Permissions API Specification

Web browser makers are continuously enhancing the functionality of their browsers to provide better experiences, or a wider range of experiences, to their users. Users may not want to allow the websites they visit to access one or more of these features. The Permissions API Specification addresses this problem by defining the concept of a powerful feature: a browser feature that websites can use only with the user's express permission. With a few notable exceptions, powerful features are also policy-controlled features, whose availability website owners can restrict under the Permissions Policy Specification.

A permission for a powerful feature can have one of three states:

- Denied. The user, or their user agent on their behalf, has denied access to the feature. The caller cannot use it. Features that are effectively denied by default include geolocation, camera access, and microphone access. Access to many of these “denied” features can be changed through prompts to the user (see Figure 1).

- Granted. The user, or the user agent on the user's behalf, has given express permission to use the feature. The caller can use the feature without the user agent asking for the user's permission. Examples of capabilities granted by default include local storage, which lets websites store data on the device, and script execution, which lets websites run JavaScript code.

- Prompt. The user must provide express permission. The user agent will prompt the user for that permission when a site asks to use the feature.

Figure 1 - Examples of Prompted Features
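In page code, these three states are what navigator.permissions.query() reports. The sketch below passes the permissions object in as a parameter (in a browser you would pass navigator.permissions) so the logic can be exercised outside a browser; the helper name describePermission and the stub object are illustrative assumptions, while query({ name }) and the PermissionStatus state property are the real API.

```javascript
// Query a permission through the Permissions API and describe what the page
// can expect. In a browser: describePermission(navigator.permissions, "geolocation").
async function describePermission(permissions, name) {
  // query() resolves to a PermissionStatus whose .state is
  // "granted", "denied", or "prompt".
  const status = await permissions.query({ name });
  switch (status.state) {
    case "granted":
      return `${name}: usable without prompting`;
    case "denied":
      return `${name}: blocked; calls will fail`;
    default:
      return `${name}: the browser will prompt the user on first use`;
  }
}

// Stand-in for navigator.permissions so the example runs outside a browser.
const stubPermissions = {
  async query({ name }) {
    const states = { geolocation: "prompt", notifications: "denied" };
    return { name, state: states[name] ?? "granted" };
  },
};

describePermission(stubPermissions, "geolocation").then(console.log);
// geolocation: the browser will prompt the user on first use
```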

To be clear, even features that appear to be denied by default may actually have “prompt” as their default permission state. This setting allows the user to be prompted to provide express permission for the feature. You can see this by clicking the site information icon on the left-hand side of the browser address bar and then clicking into Site settings. This displays the current status of permissions for each powerful feature on that specific website (Figure 2), and the interface lets the user set their preferences manually.

Figure 2 - Examples of Permission Settings in Chrome for a W3c.org Site

Developers can also use Chrome DevTools to examine the permissions for any given frame on a page and confirm that permissions are handled the way they intend (Figure 3).

Figure 3 - Permissions as Shown in Chrome Developer Tools (Application Tab)

Every permission has a lifetime: the duration for which a particular permission remains "granted" before it reverts to its default state. A lifetime could be a specific amount of time, could last until a particular top-level browsing context is destroyed, or could be infinite. The exact lifetime is set when the user gives express permission to use the feature. It can often be chosen by the user via a browser interface, or it can be hard-wired into the browser by the browser maker.

All permissions are stored locally on the device in a permission store. On Windows 11, this file is “Local State”, and it can be found in the Chrome subdirectories:

C:\Users\<user_name>\AppData\Local\Google\Chrome\User Data

Each permission store entry is a tuple consisting of a permission descriptor, a permission key, and a state.
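The permission store and lifetime concepts can be pictured as a map from (descriptor, key) to a state plus an expiry. The sketch below is an assumption-laden illustration, not Chrome's on-disk format: the permission key is simply the requesting origin, the class name is hypothetical, and a lifetime is an optional expiry timestamp after which the entry reverts to the feature's default state.

```javascript
// Illustrative in-memory permission store. Keys combine the permission
// descriptor name and a permission key (here, just the site's origin).
class PermissionStore {
  constructor(defaults) {
    this.defaults = defaults; // e.g. { geolocation: "prompt" }
    this.entries = new Map();
  }

  #id(name, origin) {
    return `${name}|${origin}`;
  }

  // lifetimeMs omitted => the grant never expires (an "infinite" lifetime).
  set(name, origin, state, now, lifetimeMs) {
    const expiresAt = lifetimeMs === undefined ? Infinity : now + lifetimeMs;
    this.entries.set(this.#id(name, origin), { state, expiresAt });
  }

  get(name, origin, now) {
    const entry = this.entries.get(this.#id(name, origin));
    if (entry && now < entry.expiresAt) return entry.state;
    // No entry, or its lifetime has ended: revert to the default state.
    return this.defaults[name] ?? "prompt";
  }
}

const store = new PermissionStore({ geolocation: "prompt" });
store.set("geolocation", "https://www.example.com", "granted", 0, 60_000);
console.log(store.get("geolocation", "https://www.example.com", 30_000)); // granted
console.log(store.get("geolocation", "https://www.example.com", 90_000)); // prompt
```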

Permissions Policies

Permissions Policy allows web developers to selectively block or delegate access to certain browser features when a user agent is viewing a page from their domain. We have already encountered Permissions Policy, in particular the Permissions-Policy header, in the prior post on Client Hints Infrastructure. Client Hints, however, are only one of the browser behaviors or features that permissions policies can control. The full standard list is shown in the resources section here.

This list, however, keeps growing as more specifications for more features are developed and W3C working groups design their technical approaches to meet the security and privacy design goals set out in RFC 6973 - Privacy Considerations for Internet Protocols, which we discussed previously. The Google Privacy Sandbox is an excellent example of this, and is explored in a later section.

Permissions policies are implemented at two layers:

- The response header layer (as we saw for Client Hints)

- The embedded frame layer - mostly around iFrames and, in terms of the Sandbox technologies, Fenced Frames.

The header layer sets the policy for the document as a whole. The embedded frame layer is more fine-grained. An iframe inherits the settings from the response header layer, and its allow attribute then delegates or further restricts those permissions for the specific origin it loads. It cannot, however, re-enable a feature that the header policy has blocked for that origin.

We’ll start by discussing the two mechanisms for permissions policies and then show how they interact. After that we will discuss the alternate Feature Policy JavaScript implementation.

Permissions Syntax

Before we delve into the mechanics of permissions policies, there are some nomenclature definitions we need to understand. These are shown in Figure 4. You can come back to this reference as we discuss the mechanics until you are comfortable with the way policies are specified under Permissions Policies.

Figure 4 - Syntactic Elements of the Permissions Policy Specification

Response Header Syntax

The response header permission settings are the global defaults across any and all features and frames on a given page. They are the primary set of permissions used when no more specific policies are put in place at the frame level.

The default structure of header permissions is relatively simple:

Permissions-Policy: <directive>=<allowlist>

The directive is the feature that needs permissioning, and the allowlist is the set of origins (domains and subdomains) to which permission will be given. Let’s take some examples to show the range of options. It is not my intention to go exhaustively through the grammar and how it works. The main point is to show you generally how to set permissions in different ways.

Let’s use the top-level domain https://www.example.com. To block all access to the geolocation directive (feature), use the following:

Permissions-Policy: geolocation=()

To allow access to a subset of origins, use the following:

Permissions-Policy: geolocation=(self "https://a.example.com" "https://b.example.com")

In this example, we are allowing geolocation access for the top-level domain (“self”, or https://www.example.com) and two of its subdomains, a.example.com and b.example.com. Note that the two full URLs are enclosed in quotes with only spaces between them, and the allowlist is enclosed in parentheses.
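If you generate these headers in server code, it helps to build them from a data structure rather than by hand, so the quoting and parentheses are always right. The helper below is a hypothetical sketch (buildPermissionsPolicy is not a library function): "self" and "*" are emitted as bare tokens, every other origin is quoted, and an empty allowlist blocks the feature.

```javascript
// Build a Permissions-Policy header value from { feature: [allowlist...] }.
// "*" means all origins; an empty array blocks the feature entirely.
function buildPermissionsPolicy(policy) {
  return Object.entries(policy)
    .map(([feature, allowlist]) => {
      if (allowlist.includes("*")) return `${feature}=*`;
      const items = allowlist.map((item) =>
        item === "self" ? "self" : `"${item}"` // origins must be quoted strings
      );
      return `${feature}=(${items.join(" ")})`;
    })
    .join(", ");
}

const value = buildPermissionsPolicy({
  geolocation: ["self", "https://a.example.com", "https://b.example.com"],
  camera: [],
});
console.log(`Permissions-Policy: ${value}`);
// Permissions-Policy: geolocation=(self "https://a.example.com" "https://b.example.com"), camera=()
```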

Permissions can be combined on a single line or broken out into separate headers. The two examples below are equivalent:

Permissions-Policy: picture-in-picture=(), geolocation=(self "https://example.com"), camera=*

Is the same as:

Permissions-Policy: picture-in-picture=()

Permissions-Policy: geolocation=(self "https://example.com")

Permissions-Policy: camera=*

The list of powerful features that can be controlled by both the header form and the embedded syntax form is shown here.
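The equivalence holds because HTTP treats repeated header lines as a single comma-separated list. The deliberately simplified parser below (a hypothetical sketch that handles only the forms used in this post, not the full Structured Fields grammar of RFC 8941) shows that both versions produce the same policy once the lines are joined with commas.

```javascript
// Simplified Permissions-Policy parser for the forms used in this post:
// feature=(), feature=*, feature=(self "https://origin" ...).
// It is NOT a full RFC 8941 Structured Fields parser.
function parsePermissionsPolicy(headerLines) {
  // Multiple header lines are combined into one comma-separated value.
  const combined = headerLines.join(", ");
  const policy = {};
  for (const member of splitTopLevel(combined)) {
    const eq = member.indexOf("=");
    const feature = member.slice(0, eq).trim();
    let value = member.slice(eq + 1).trim();
    if (value.startsWith("(") && value.endsWith(")")) {
      value = value.slice(1, -1);
    }
    // Tokens are self, *, or quoted origins separated by spaces.
    policy[feature] = value
      .split(/\s+/)
      .filter(Boolean)
      .map((t) => t.replace(/^"|"$/g, ""));
  }
  return policy;
}

// Split on commas that are not inside quotes or parentheses.
function splitTopLevel(s) {
  const parts = [];
  let depth = 0, inQuote = false, current = "";
  for (const ch of s) {
    if (ch === '"') inQuote = !inQuote;
    else if (!inQuote && ch === "(") depth++;
    else if (!inQuote && ch === ")") depth--;
    if (ch === "," && depth === 0 && !inQuote) {
      parts.push(current.trim());
      current = "";
    } else {
      current += ch;
    }
  }
  if (current.trim()) parts.push(current.trim());
  return parts;
}

const oneLine = parsePermissionsPolicy([
  'picture-in-picture=(), geolocation=(self "https://example.com"), camera=*',
]);
const threeLines = parsePermissionsPolicy([
  "picture-in-picture=()",
  'geolocation=(self "https://example.com")',
  "camera=*",
]);
console.log(JSON.stringify(oneLine) === JSON.stringify(threeLines)); // true
```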

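What happens if there is no Permissions Policy header on the page? In that case, each feature falls back to the default allowlist defined in its specification. That default is either * (all origins, including those of embedded frames, have access) or self (only the page's own origin has access, and cross-origin iframes must be granted the feature through the allow attribute). Powerful features such as geolocation and camera default to self.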

Embedded Frame Syntax

Let’s say a publisher’s page, https://www.example.com/home, contains two third-party iFrames: one for a payment widget and one that contains an ad. The two iFrames come from different vendors, and the publisher wants to give these vendors access to different powerful features. Only the payment widget should have access to the user’s identity credentials; for security reasons, the advertiser should never have access to them. At the same time, only the advertiser should have access to the geolocation feature, as a way to know which ad to serve. How does the publisher accomplish that?

This is where the embedded frame layer comes in. The embedded frame layer allows for more fine-grained, differential control of permission delegation than the header layer can provide. It lets the developer set permissions at the frame level that refine those from the header layer for each embedded origin, within the limits the header sets.

The basic syntax of the embedded frame approach is as follows:

<iframe src="<url>" allow="<directive> <allowlist>"></iframe>

The src is the URL of the embedded content, which for a third-party widget is on the vendor's origin. The allow="<directive> <allowlist>" attribute sets the permission for a specific feature and identifies which origins have access to it. If the allowlist is omitted, it defaults to the origin of the src URL.

One very important note about this approach is that once a permission is delegated to a third party, that third party can delegate the same permission to other third parties it does business with (for example, through iFrames of its own). The assumption is that if the third party is trusted, then it can be relied on to share these permissions only with parties that are also trusted.

So now let’s show how this would be implemented in our example.

Here are the header permission settings for the top-level domain on this page. Note that each vendor's origin has to appear in the header allowlist for the feature it needs; otherwise the iFrame's allow attribute cannot grant it:

Permissions-Policy: identity-credentials-get=(self "https://www.payment_provider.com")

Permissions-Policy: geolocation=(self "https://www.ad_provider.com")

Permissions-Policy: camera=*

Now let’s show the embedded frame settings for the payment provider's iFrame:

<iframe src="https://www.payment_provider.com" allow="identity-credentials-get 'self' https://www.payment_provider.com"></iframe>

And here are the embedded frame settings for the ad provider’s iFrame:

<iframe src="https://www.ad_provider.com" allow="geolocation 'self' https://www.ad_provider.com"></iframe>

Figure 5 shows how these sets of permissions interact to give the correct accesses to the origin as well as the two third-parties.

Figure 5 - Resulting Permissions Policies for https://www.example.com/home
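The interaction between the two layers can be sketched as a small decision function. This is a simplified, hypothetical model of the Permissions Policy inheritance rules (the function and parameter names are made up, and it ignores nested frames and the 'none' keyword): the header allowlist acts as a ceiling, the allow attribute then decides delegation, and the feature's default allowlist applies only when neither says anything.

```javascript
// Does `feature` end up enabled inside an iframe loaded from `frameOrigin`?
// headerPolicy: parsed top-level header, e.g. { geolocation: ["self", "https://ads.example"] }
// allowAttr:    parsed iframe allow attribute, e.g. { geolocation: ["src"] }
// defaults:     each feature's default allowlist, "*" or "self"
function enabledInFrame(feature, { topOrigin, frameOrigin, headerPolicy, allowAttr, defaults }) {
  const matches = (list, origin) =>
    list.some((item) =>
      item === "*" ||
      (item === "self" && origin === topOrigin) ||
      (item === "src" && origin === frameOrigin) ||
      item === origin
    );

  if (feature in headerPolicy) {
    // 1. If the embedding page itself is not allowed the feature, no frame is.
    if (!matches(headerPolicy[feature], topOrigin)) return false;
    // 2. The header is a ceiling: the frame's origin must be on its allowlist,
    //    whatever the allow attribute says.
    if (!matches(headerPolicy[feature], frameOrigin)) return false;
  }
  // 3. The allow attribute delegates (or withholds) the feature for this frame.
  if (feature in allowAttr) return matches(allowAttr[feature], frameOrigin);
  // 4. Otherwise fall back to the feature's default allowlist.
  return defaults[feature] === "*" || frameOrigin === topOrigin;
}

const topOrigin = "https://www.example.com";
const common = { topOrigin, defaults: { geolocation: "self" } };

// Header lists the ad vendor and the iframe delegates: enabled.
console.log(enabledInFrame("geolocation", {
  ...common, frameOrigin: "https://www.ad_provider.com",
  headerPolicy: { geolocation: ["self", "https://www.ad_provider.com"] },
  allowAttr: { geolocation: ["src"] },
})); // true

// Header lists only self: the allow attribute cannot override it.
console.log(enabledInFrame("geolocation", {
  ...common, frameOrigin: "https://www.ad_provider.com",
  headerPolicy: { geolocation: ["self"] },
  allowAttr: { geolocation: ["src"] },
})); // false
```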

Again, the point here is not to drill deeply into the various combinations of syntactic patterns for either of these layers. The main concept to take away from this discussion is how the header layer and the embedded layer interact to provide fine-grained control of policies for the various parties that operate on any given webpage.

Alternate Feature Policy API Javascript-Based Mechanic

We previously mentioned that Permissions Policy evolved from another standard called Feature Policy. Feature Policy was subject to some generic design weaknesses of HTTP headers that were resolved as part of a more general update to header structures called Structured Fields. However, the JavaScript-based approach to permissions under Feature Policy has yet to be updated. So the Feature Policy API remains, for now, the way to work with Permissions Policy from JavaScript. A proposal to update the API for Permissions Policy exists, but not much has happened with it since early 2022, so it is not clear when or if these updates will be made.

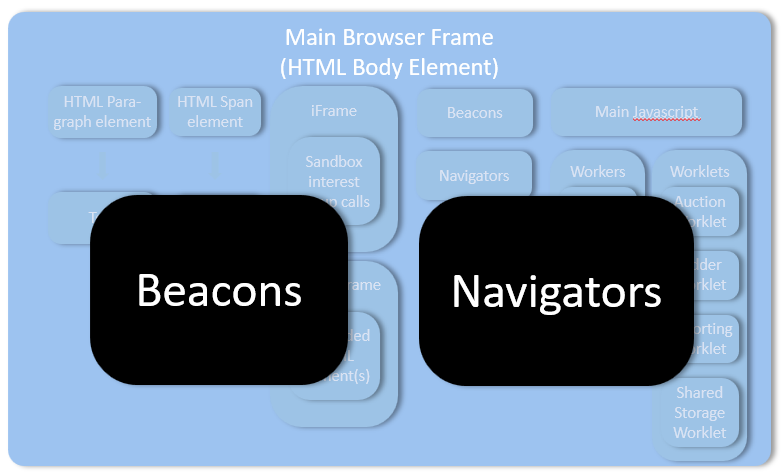

The API consists of four endpoints (methods) that allow developers to examine whether powerful features are allowed in either a document or an iFrame, depending on context. The API is read-only: it reports the policy in effect but cannot change it, which is still done through the header and the allow attribute. Figure 6 lists these four endpoints and describes what they do.

Figure 6 - Feature Policy API Endpoints

There are subtle differences between how these methods behave at the document and iFrame levels. For example, when featurePolicy.allowsFeature(feature, origin) is called on document.featurePolicy, it tells you only that it is possible for the feature to be allowed for that origin. The developer would still need to check the allow attribute on the iframe element to determine whether the feature is actually allowed for that element’s third-party origin. Those who wish to drill further into the API syntax and usage can see this MDN article on FeaturePolicy.
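For readers who want to see the shape of the API, here is a small sketch that reports, for a list of features, whether each is allowed and what its allowlist is. It uses only two of the read-only methods described on MDN, allowsFeature() and getAllowlistForFeature(); the policy object is passed in so the example can run outside Chrome with a stub, and the helper name summarizeFeaturePolicy is hypothetical. In a Chromium browser you would pass document.featurePolicy or an iframe element's featurePolicy property; because the API is Chromium-only, the code checks for its presence first.

```javascript
// Summarize a FeaturePolicy-like object (document.featurePolicy or
// iframeElement.featurePolicy in Chromium browsers).
function summarizeFeaturePolicy(policy, features) {
  if (!policy) return null; // API absent (e.g., Firefox, Safari)
  return features.map((feature) => ({
    feature,
    allowed: policy.allowsFeature(feature),
    allowlist: policy.getAllowlistForFeature(feature),
  }));
}

// Stub standing in for document.featurePolicy on a page served with
// Permissions-Policy: geolocation=(self "https://a.example.com"), camera=()
const stubPolicy = {
  allowlists: {
    geolocation: ["https://www.example.com", "https://a.example.com"],
    camera: [],
  },
  allowsFeature(feature) {
    return (this.allowlists[feature] ?? []).length > 0;
  },
  getAllowlistForFeature(feature) {
    return this.allowlists[feature] ?? [];
  },
};

console.log(summarizeFeaturePolicy(stubPolicy, ["geolocation", "camera"]));
```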

Permissions Policy and the Google Privacy Sandbox

Since this blog is all about the Privacy Sandbox, I would be remiss if I didn’t discuss exactly how Permissions Policy applies to the APIs specific to the Sandbox. There are two aspects of Permissions Policy to discuss with regard to the Privacy Sandbox:

- Permissions directives/features which apply to the various Privacy Sandbox APIs

- Embedded layer permissions in fenced frames

Permissions Features for Privacy Sandbox APIs

Figure 7 shows the features around Privacy Sandbox which can be controlled via the Permissions Policy Specification.

Figure 7 - Privacy Sandbox APIs Subject to Permissions Policy

Permissions Policy in Fenced Frames

Fenced Frames were discussed in a post at the beginning of Chapter 2. Fenced frames are an evolution of iFrames that provide more native privacy features and address other shortcomings of iFrames. The core design goal of fenced frames is to ensure that a user’s identity/information from the advertiser cannot be correlated or mixed with user information from the publisher’s site when an ad is served. Fenced frames have numerous restrictions relative to iFrames to ensure that such cross-site information sharing cannot occur.

These limitations, however, create a challenge for permissions policy. A set of permissions delegated from permissions headers to a fenced frame could potentially allow access to features that could be used as a communication channel between origins, thus opening the way for cross-site information sharing.

As a result, standard web features whose access is typically controlled via Permissions Policy (for example, camera or geolocation) are not available within fenced frames. The only features that can be enabled by a policy inside fenced frames are the specific features designed to be used inside fenced frames:

Protected Audience API:

- attribution-reporting

- private-aggregation

- shared-storage

- shared-storage-select-url

Shared Storage API:

- attribution-reporting

- private-aggregation

- shared-storage

- shared-storage-select-url

Currently these permissions are always enabled inside fenced frames. In the future, which ones are enabled will be controllable using the allow attribute on the <fencedframe> element. Blocking Privacy Sandbox features in this manner will also block the fenced frame from loading, so there will be no communication channel at all.

So we come to the end of Chapter 2 for now. I may decide to expand it later to include discussions of CORS, CORB, and other security standards. But we’ll leave it here for now and begin the move into the server-side elements of the Privacy Sandbox.

Client Hints Infrastructure

Introduction

In the last post I introduced the basics of browser and device fingerprinting and noted just how much information is available to any website or third-party tag embedded in a served page. That information was intended to let websites optimize the user experience for the specific combination of device, operating system, browser, screen size, and more on a given viewer’s device. However, the sheer amount of information made available by this open sharing allowed a specific individual user/user agent to be identified the majority of the time. This enabled an alternative, and very powerful, form of cross-site tracking independent of cookies and other techniques.

The amount of information can be measured using the concept of entropy from information theory, which you can think of as a meta-descriptor that tells you how many bits of information are needed and/or available to provide a unique identification. In this case, Eckersley, in his seminal paper on fingerprinting, estimated that the user agent alone contains 10 bits of information, or $2^{10} = 1{,}024$ possible values. That means that only 1 in 1,024 random browsers visiting a site is expected to share the same user agent header. Add a few other features like screen resolution, timezone, and browser plugins (among others) and that number goes to 18.1 bits of information. That means only 1 in 286,777 other browsers will share its fingerprint.

That number may not seem large, but a site with 286,777 unique visitors a day has several million unique visitors a month (for example, if the average user visits twice a month, 30 days × 286,777 daily visitors ÷ 2 visits each is roughly 4.3 million unique visitors). That means that over one month, each fingerprint would on average be shared by only about 15 browsers. Let’s take CNN, with 767.4 million viewers per month. If all of those were unique viewers (which they aren’t), each fingerprint would on average be shared by only about 2,676 browsers (767.4 million ÷ 286,777). That is a small enough pool that fingerprinting becomes extremely useful in identifying individuals for marketing purposes, exactly what the Privacy Sandbox and other privacy-first technologies are trying to prevent.
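The arithmetic in the last two paragraphs is easy to reproduce. The sketch below converts bits of entropy into a "1 in N" figure and back, and estimates how many browsers share each fingerprint for a given visitor count. It assumes fingerprints are spread uniformly, which real fingerprints are not, so treat the outputs as rough averages; the helper names are illustrative.

```javascript
// Bits of entropy -> "1 in N" browsers share a value (N = 2^bits).
const oneIn = (bits) => 2 ** bits;
// "1 in N" -> bits of entropy.
const bitsFor = (n) => Math.log2(n);
// Average number of browsers per fingerprint, assuming a uniform spread
// over `distinctFingerprints` possible values.
const sharedPerFingerprint = (visitors, distinctFingerprints) =>
  visitors / distinctFingerprints;

console.log(oneIn(10)); // 1024
console.log(bitsFor(286777).toFixed(2)); // 18.13
const monthlyUniques = (286777 * 30) / 2; // visitors who come twice a month
console.log(sharedPerFingerprint(monthlyUniques, 286777)); // 15
console.log(Math.round(sharedPerFingerprint(767.4e6, 286777))); // 2676
```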

In this post, we are first going to delve more deeply into the industry’s early response to limit the amount of information available for fingerprinting. Then we will explore Google’s specific responses: Client Hints Infrastructure and User Agent Client Hints. Even today, neither Safari nor Firefox supports Google's approach. Edge does, as it is built on Chromium.

Early Responses to Browser Fingerprinting

It took almost 10 years for OS and browser makers to deal with fingerprinting information in the user agent header. Mozilla was the first mover, in January 2020 with Firefox 72. It then made similar changes to Firefox for Android with version 79 in July 2020. Apple followed suit in September 2020 on both macOS and iOS 14. The main changes fell into three categories:

- Freezing at the major browser version. Browser version no longer showed the minor version. So instead of 79.0.1 the user agent string was limited to 79.

- Hiding device-specific details. The UA string no longer provided detailed information about the specific Android version or device model.

- Hiding the minor OS version. Instead of providing the exact operating system version, the UA string in Safari 14 and later began reporting only broad version numbers or generalized information. Similarly, Firefox fixed the operating system version and did not report minor versions.

There are subtle differences between how Apple and Mozilla implemented these limitations. Those deltas are shown in Figure 1.

Figure 1 - Differences Between Mozilla’s and Apple’s Restrictions on the User Agent Header

Taking the example from the prior post, here is a before-and-after comparison. The values that changed are Android 13; Pixel 7, which became Android 10; K:

Before: Mozilla/5.0 (Linux; Android 13; Pixel 7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.0.0 Mobile Safari/537.36

After: Mozilla/5.0 (Linux; Android 10; K) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.0.0 Mobile Safari/537.36

The general structure of the user agent hasn’t changed. This avoided forcing the industry to rewrite their code to parse a user agent string (which would have been exceedingly painful). Instead the changes were subtle, as shown in Figure 2.

Figure 2: Diagram Showing Where Changes Fall in the Reduced User Agent String

The blue values in Figure 2 show the elements that will continue to be updated on all platforms (including Chrome). The green values indicate elements that are frozen on all platforms or, in Chrome's case, are made available through User Agent Client Hints instead.

Google took a different tack: rather than relying on a reduced user agent string alone, it paired Chrome's reduced string with a new, active negotiation mechanism. Beyond the privacy issues, Google also wanted to address the complexity web servers faced in reading user agent headers passively. Often the server cannot reliably identify all the static variables in the user agent header or infer dynamic user agent preferences. Additionally, publishers and their ad tech intermediaries have to query databases of user agent strings, such as DeviceAtlas, in real time to identify the device/OS/browser combination they are serving. These database services are expensive, as is writing and maintaining the code. Google wanted to create a shared API that used standard metadata definitions of user agent elements to support active, standardized negotiation between browser and server over which elements could be shared. This would enhance privacy and lower costs even as it allowed sharing of high-entropy elements of the user agent header for an optimal user experience.

Overview of the Google Client Hints Infrastructure

Google’s approach is titled Client Hints Infrastructure, with a specific user agent aspect called User Agent Client Hints. Client Hints Infrastructure provides the desired active interface while protecting privacy by requiring the high entropy descriptors to be shared only with appropriate permissions/opt-ins from the user agent. It also, by default, limits sharing of any high-entropy settings with third-parties on the page unless the top-level domain uses browser permissions (more in the next post) to specifically delegate that access to specific third-parties.

There are two ways to implement client hints infrastructure:

- An HTTP Header-Based Approach: Using HTTP headers, which is available only for first-party contexts. There are two versions of this: the headers themselves and a meta tag-based approach.

- A JavaScript API-based Approach: Using a JavaScript API, which can be used by an embedded script.

I will start with the header-based approach to introduce the basic concepts and then show how they translate into the JavaScript API-based approach.

Universal Low Entropy Elements That Do Not Require Client Hints

Before I delve into Client Hints Infrastructure, it is important to understand the difference in how low-entropy and high-entropy client hints are handled. Low-entropy user agent elements don’t require Client Hints Infrastructure. They are sent by default to the server with all requests (see Figure 3 further down in this post). Those user agent elements are:

- Browser brand

- The browser’s significant major version

- Platform (operating system, e.g. Android, Windows, iOS, Linux)

- Indicator whether or not the client is a mobile device

These simple features do not provide enough information to be able to fingerprint a device.
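On the wire, these low-entropy values arrive as the Sec-CH-UA, Sec-CH-UA-Mobile, and Sec-CH-UA-Platform request headers, which use the Structured Fields syntax. A server-side sketch for reading them might look like the following; it is a simplified parser for these three headers only, and the function name and the lowercase-keyed header object (the shape Node's http module provides in req.headers) are assumptions.

```javascript
// Read the default low-entropy UA-CH request headers (simplified parsing).
// `headers` is assumed to be a lowercase-keyed object, as in Node's req.headers.
function readLowEntropyHints(headers) {
  const brands = [];
  // Each list item looks like "Brand Name";v="93".
  const brandPattern = /"((?:[^"\\]|\\.)*)"\s*;\s*v="([^"]*)"/g;
  for (const [, brand, version] of (headers["sec-ch-ua"] ?? "").matchAll(brandPattern)) {
    brands.push({ brand, version });
  }
  return {
    brands,
    // Structured Fields booleans: ?1 is true, ?0 is false.
    mobile: headers["sec-ch-ua-mobile"] === "?1",
    // The platform is a quoted string such as "macOS"; strip the quotes.
    platform: (headers["sec-ch-ua-platform"] ?? "").replace(/^"|"$/g, ""),
  };
}

const hints = readLowEntropyHints({
  "sec-ch-ua": '"Chromium";v="93", "Google Chrome";v="93", " Not;A Brand";v="99"',
  "sec-ch-ua-mobile": "?0",
  "sec-ch-ua-platform": '"macOS"',
});
console.log(hints.brands.length, hints.mobile, hints.platform); // 3 false macOS
```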

The Client Hints Infrastructure Header Elements

There are only four header elements unique to Client Hints Infrastructure that are needed to access high-entropy hints. A fifth, the Permissions-Policy header, is more broadly used and not unique to Client Hints.

- Accept-CH Header. When a server wants to access high-entropy client hints, it asks the browser for them using the Accept-CH response header. It is a response header because the initial request comes from the user agent requesting the specific web page; the server then responds with an Accept-CH header listing the hints it needs (usually to optimize the user experience). We’ll get into the actual mechanics shortly, but here is an example of what a simple Accept-CH header looks like:

Accept-CH: Viewport-Width, Width, Device-Memory

That example is for hints that are not part of the user agent. The User Agent Client Hints specification uses a slightly different nomenclature for any item that is part of the user agent: every hint name starts with the Sec-CH-UA prefix:

Accept-CH: Sec-CH-UA-Model, Sec-CH-UA-Platform-Version, Sec-CH-UA-Arch

- Sec-CH-x or Sec-CH-UA-x Header. The Sec-CH-<x> and Sec-CH-UA-<x> headers are the request headers in which the user agent returns specific high-entropy values. The former is for general client hints, while the latter is specifically for user agent client hints. The <x> is filled in with the specific property being requested. Here is an example for an item that is not part of the user agent header:

Accept-CH: Sec-CH-Viewport-Width, Sec-CH-Width

In a subsequent request, the client might include the following headers:

GET /image.jpg HTTP/1.1

Host: example.com

Sec-CH-Viewport-Width: 800

Sec-CH-Width: 600

Note that the user agent returns each hint under the header name the server opted in to. Viewport-Width and Width are older, unprefixed names for the same hints; a server that lists those names in Accept-CH receives unprefixed Viewport-Width and Width headers rather than the Sec-CH- versions.

You can find the list of available client hints under the Resources section of theprivacysandbox.com here.

- Critical-CH Header. Normally, after sending Accept-CH, the server receives the allowed high-entropy hints only on the second and subsequent requests. If it is critical that every load, including the first, has the requested client hints, the server can also list them in a Critical-CH header. If a critical hint was missing from the request, the browser retries the request once with the hint included. Here is an example of how the Critical-CH header is used:

HTTP/1.1 200 OK

Content-Type: text/html

Accept-CH: Device-Memory, DPR, Viewport-Width

Critical-CH: Device-Memory

- Permissions-Policy Header. As mentioned previously, client hints are by default only available to the top-level domain that sent the Accept-CH response. However, in many cases the publisher may want to share these values with third-party vendors whose tags on the page need them, such as an iFrame that displays an ad and needs to know the screen resolution to display its graphics correctly. Here is an example of how the Permissions-Policy header is used:

HTTP/2 200 OK

Content-Type: text/html

Accept-CH: Viewport-Width, Width

Permissions-Policy: ch-viewport-width=(self "https://cdn.example.com"), ch-width=(self "https://cdn.example.com")

Note that the permission is specific to one third-party site and each element has to be called out specifically. That way the top-level domain can share only those elements needed by the third-party and nothing more.

- Meta Tag Variant. As also mentioned previously, there is a variant of Client Hints Infrastructure that lets developers use a meta tag to request specific client hints. That request has the form shown below:

<meta http-equiv="Accept-CH" content="Viewport-Width, Width" />

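- Delegate-CH Meta Tag. Delegate-CH is used in the meta tag variant in place of the Permissions-Policy header. Each hint is followed by the origins it is delegated to, and entries are separated by semicolons:

<meta http-equiv="Delegate-CH" content="sec-ch-ua-model https://cdn.example.com; sec-ch-ua-platform https://cdn.example.com; sec-ch-ua-platform-version https://cdn.example.com">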
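Pulling the header pieces together, here is a hedged, framework-free sketch of the server side: it builds the response headers that opt in to hints, marks one as critical, and delegates hints to a third-party origin, and it models the browser's Critical-CH retry check. The helper names, the choice of hints, and https://cdn.example.com are illustrative assumptions; the ch- feature names follow the pattern in the Permissions-Policy example above (ch-viewport-width for Sec-CH-Viewport-Width).

```javascript
// Map a client hint header name to its Permissions-Policy feature name,
// e.g. "Sec-CH-Viewport-Width" -> "ch-viewport-width", "DPR" -> "ch-dpr".
function policyFeatureFor(hint) {
  return "ch-" + hint.toLowerCase().replace(/^sec-ch-/, "");
}

// Response headers that opt in to hints, mark some critical, and delegate
// them to third-party origins via Permissions-Policy.
function clientHintResponseHeaders(hints, critical, delegateTo) {
  const allowlist = ["self", ...delegateTo.map((o) => `"${o}"`)].join(" ");
  return {
    "Accept-CH": hints.join(", "),
    "Critical-CH": critical.join(", "),
    "Permissions-Policy": hints
      .map((h) => `${policyFeatureFor(h)}=(${allowlist})`)
      .join(", "),
  };
}

// Simplified model of the browser's Critical-CH check: retry once if a
// critical hint was missing from the request that produced this response.
// requestHeaders is assumed to be lowercase-keyed.
function shouldRetry(requestHeaders, critical) {
  return critical.some((h) => !(h.toLowerCase() in requestHeaders));
}

const response = clientHintResponseHeaders(
  ["Sec-CH-Viewport-Width", "Sec-CH-Width"],
  ["Sec-CH-Viewport-Width"],
  ["https://cdn.example.com"]
);
console.log(response["Permissions-Policy"]);
// ch-viewport-width=(self "https://cdn.example.com"), ch-width=(self "https://cdn.example.com")

// First visit: no hints were sent yet, so the critical hint triggers a retry.
console.log(shouldRetry({ host: "example.com" }, ["Sec-CH-Viewport-Width"])); // true
// The retried request carries the hint, so no further retry.
console.log(shouldRetry({ "sec-ch-viewport-width": "800" }, ["Sec-CH-Viewport-Width"])); // false
```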

Javascript-Based Approach

As mentioned earlier, only the top-level domain can use the header-based mechanism. Third parties with JavaScript tags embedded in a page must use the JavaScript-based version of Client Hints Infrastructure to request these values (the top-level domain can also use the JavaScript API). The JavaScript-based mechanism uses the navigator.userAgentData object to access client hints. The default low-entropy elements can be accessed via three properties: brands, mobile, and platform.

// Log the brand data

console.log(navigator.userAgentData.brands);

// output

[

{

brand: 'Chromium',

version: '93',

},

{

brand: 'Google Chrome',

version: '93',

},

{

brand: ' Not;A Brand',

version: '99',

},

];

// Log the mobile indicator

console.log(navigator.userAgentData.mobile);

// output

false;

// Log the platform value

console.log(navigator.userAgentData.platform);

// output

"macOS";

As always, don’t worry about what the code means. Just note the use of navigator.userAgentData and its brands, mobile, and platform properties.

High entropy values are accessed through a getHighEntropyValues() call.

// Log the full user-agent data

navigator

.userAgentData.getHighEntropyValues(

["architecture", "model", "bitness", "platformVersion", "fullVersionList"])

.then(ua => { console.log(ua) });

Again, ignore the code per se. Just note the getHighEntropyValues() call and the five types of information it requests. There are no Sec-CH-UA- or Sec-CH- prefixes; the desired information is requested using the base names of the features.
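In practice, third-party code should feature-detect navigator.userAgentData, since Safari and Firefox do not implement it, and fall back gracefully if a hint is unavailable. The sketch below takes the userAgentData object as a parameter so it can be tested with a stub; the helper name getDeviceModel and the "unknown" fallback are assumptions, while getHighEntropyValues() and the "model" hint name are the real API shown above.

```javascript
// Resolve the device model via UA-CH, with a fallback when the API is absent.
// In a browser: getDeviceModel(navigator.userAgentData).
async function getDeviceModel(uaData) {
  if (!uaData || typeof uaData.getHighEntropyValues !== "function") {
    return "unknown"; // Safari, Firefox, or a non-secure context
  }
  try {
    const values = await uaData.getHighEntropyValues(["model"]);
    // "model" is typically an empty string on desktop platforms.
    return values.model || "unknown";
  } catch {
    return "unknown"; // the browser may decline to reveal the hint
  }
}

// Stub mimicking Chrome on an Android phone.
const stubUaData = {
  mobile: true,
  platform: "Android",
  async getHighEntropyValues(hints) {
    const all = { model: "Pixel 7", platformVersion: "13.0.0" };
    return Object.fromEntries(hints.map((h) => [h, all[h]]));
  },
};

(async () => {
  console.log(await getDeviceModel(stubUaData)); // Pixel 7
  console.log(await getDeviceModel(undefined)); // unknown
})();
```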

The Critical-CH header is not relevant in this approach as there is no two-step round-trip as in the header-based version. However, if I am the top-level domain, it is not clear to me how the Permissions-Policy header is implemented in the JavaScript version. My guess is it isn’t and that permissions always have to be set using browser Permissions by the top-level domain in HTTP headers.

Accept-CH Cache and Accept-CH Frame

Before we delve into the mechanics and flows of Client Hints Infrastructure, there are two more critical concepts we need to introduce: the Accept-CH cache and the Accept-CH frame.

The Accept-CH cache is where the browser stores, on the user’s device, the permissions for which hints may be shared with each site. It is somewhat like an alternative cookie store, in that sites can use each hint as a bit set on the client that will be communicated with every request. The cache allows for updates to which high-entropy hints can be shared. But because it behaves like a cookie store, the specification subjects it to policies similar to those for cookies: a user agent is required to clear the Accept-CH cache whenever the user clears their cookies or the session cookies expire. There is also another header we have not covered, the Clear-Site-Data header, which provides a mechanism to programmatically clear data stored by a website on the client side. This can include:

- Cookies: Session and persistent cookies.

- Storage: Local storage, session storage, IndexedDB, and other client-side storage mechanisms.

- Cache: HTTP cache, including cached pages and resources. The Accept-CH cache is also subject to the policies set by this header.
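To make the cookie-store analogy concrete, here is a toy model of an Accept-CH cache in JavaScript (runnable in Node.js). The AcceptCHCache class and its methods are hypothetical; browsers keep this state in their own internal format. The sketch assumes, as described above, that a new Accept-CH header replaces a site's previous opt-ins and that clearing cookies or site data wipes them.

```javascript
// Toy Accept-CH cache (hypothetical; real browsers use internal storage).
// Maps each origin to the hint headers from its latest Accept-CH header.
class AcceptCHCache {
  constructor() {
    this.byOrigin = new Map();
  }

  // A new Accept-CH header replaces the origin's previous opt-ins.
  // Header names are case-insensitive, so they are stored lowercased.
  update(origin, acceptCHValue) {
    const hints = acceptCHValue
      .split(",")
      .map((h) => h.trim().toLowerCase())
      .filter((h) => h.length > 0);
    this.byOrigin.set(origin, new Set(hints));
  }

  // Hints the browser would attach to its next request to this origin.
  hintsFor(origin) {
    return [...(this.byOrigin.get(origin) ?? [])];
  }

  // In this model, clearing a site's cookies or receiving Clear-Site-Data
  // for it also drops its opt-ins, so hints can't act as a hidden cookie.
  clearOrigin(origin) {
    this.byOrigin.delete(origin);
  }

  // Clearing all cookies clears every origin's opt-ins.
  clearAll() {
    this.byOrigin.clear();
  }
}

const cache = new AcceptCHCache();
cache.update("https://example.com", "Sec-CH-UA-Model, Sec-CH-UA-Platform-Version");
console.log(cache.hintsFor("https://example.com"));
// [ 'sec-ch-ua-model', 'sec-ch-ua-platform-version' ]
cache.clearOrigin("https://example.com");
console.log(cache.hintsFor("https://example.com")); // []
```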

The Accept-CH frame is a mechanism designed to optimize the delivery of Client Hints in HTTP/2 and HTTP/3 by leveraging the transport layer to reduce the performance overhead of the extra request-response round trips needed to deliver client hints. It is related to the Accept-CH HTTP header but operates at a different level to improve efficiency and reduce latency.

The transport layer is Layer 4 of the Open Systems Interconnection (OSI) model. OSI is a foundational computing standard that describes how computing devices communicate across networks. It was released in 1984 (about a decade before the Internet went mainstream) and was fundamental for communication using old tech like dial-up modems. Explaining it is beyond the scope of this blog, but if you are interested there is a good introduction here.

We will discuss the Accept-CH frame at length in the next section, but for now just know that a "frame" is the smallest unit of communication in HTTP/2 and HTTP/3. Frames encapsulate different types of data, such as headers, body data, and control information, and are transmitted over a single stream within a connection. Each frame type has a specific structure and purpose, which allows many streams to be multiplexed efficiently over a single connection.
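To make "frame" less abstract, here is a sketch in JavaScript (Node.js, using Buffer) that encodes and decodes the 9-byte header every HTTP/2 frame starts with, as laid out in RFC 9113: a 24-bit payload length, an 8-bit type, 8 bits of flags, one reserved bit, and a 31-bit stream identifier. The function names are my own, and I am deliberately not reproducing the Accept-CH frame's own type code or payload layout; this only shows the common envelope such a frame travels in.

```javascript
// Encode the fixed 9-byte HTTP/2 frame header (RFC 9113, section 4.1).
function encodeFrameHeader({ length, type, flags, streamId }) {
  if (length < 0 || length > 0xffffff) {
    throw new RangeError("length must fit in 24 bits");
  }
  if (streamId < 0 || streamId > 0x7fffffff) {
    throw new RangeError("streamId must fit in 31 bits");
  }
  const buf = Buffer.alloc(9);
  buf.writeUIntBE(length, 0, 3);                // bytes 0-2: payload length
  buf.writeUInt8(type, 3);                      // byte 3: frame type
  buf.writeUInt8(flags, 4);                     // byte 4: flags
  buf.writeUInt32BE(streamId & 0x7fffffff, 5);  // bytes 5-8: reserved bit 0 + stream ID
  return buf;
}

// Decode the same 9 bytes back into their fields, ignoring the reserved bit.
function decodeFrameHeader(buf) {
  return {
    length: buf.readUIntBE(0, 3),
    type: buf.readUInt8(3),
    flags: buf.readUInt8(4),
    streamId: buf.readUInt32BE(5) & 0x7fffffff,
  };
}

// An empty SETTINGS frame (type 0x4) sent on the connection-level stream 0.
const settings = encodeFrameHeader({ length: 0, type: 0x4, flags: 0, streamId: 0 });
console.log(settings.toString("hex")); // 000000040000000000
console.log(decodeFrameHeader(settings));
```

Because the header has a fixed size, a receiver can read the length and type before deciding how to parse the payload, which is what lets frames from many streams be interleaved on one connection.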

The Basic Mechanics of Client Hints Infrastructure

The Basic Flows

Figure 3 shows how the client and server interact for client hints. As the diagram shows, there are five steps:

- First the TLS handshake between the browser and server occurs. (For those who really want to delve into how this works, the Chrome University videos are an excellent source to learn from).

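- The client sends a request containing only the default, low-entropy user-agent information that is shared without any Client Hints request (in Chrome, the Sec-CH-UA, Sec-CH-UA-Mobile, and Sec-CH-UA-Platform headers).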

- The server responds with an Accept-CH header requesting the high-entropy values it needs to optimize the user experience.

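- The browser now resends its original request, but this time includes whichever of the requested values its permissions allow it to share. (An immediate retry like this happens when the server also lists the hints in a Critical-CH header; with Accept-CH alone, the hints are sent on subsequent requests.)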

- The server reads the specific values and returns page content optimized for that set of values. At the same time, it repeats the Accept-CH response header to tell the browser that it wants the same fields on the next request.

Figure 3 - The Basic Mechanics of Client Hints Infrastructure
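The flow in Figure 3 can be sketched as a toy simulation in JavaScript. Everything here is hypothetical: server() and browserFetch() are stand-ins for a real server and browser, step 1 (the TLS handshake) is skipped, and no network traffic occurs. Only the header names come from the Client Hints specifications. One assumption worth flagging: I have the server send Critical-CH alongside Accept-CH, because that is what makes a browser retry the request immediately.

```javascript
// Toy simulation of steps 2-5 (hypothetical functions; no real network I/O).
const WANTED = ["sec-ch-ua-model", "sec-ch-ua-platform-version"];

// Server side: serve a tailored page only if every wanted hint arrived.
function server(requestHeaders) {
  const headers = { "accept-ch": WANTED.join(", ") }; // always (re)advertise
  const haveAll = WANTED.every((h) => h in requestHeaders);
  if (!haveAll) {
    // Step 3: ask for the hints. Critical-CH tells the browser to retry now.
    headers["critical-ch"] = WANTED.join(", ");
    return { headers, body: "generic page" };
  }
  // Step 5: content tuned to the hints, with Accept-CH repeated.
  return { headers, body: `page for ${requestHeaders["sec-ch-ua-model"]}` };
}

// Browser side: `allowed` holds the high-entropy values its settings let it share.
function browserFetch(allowed) {
  // Step 2: default low-entropy hints only (a subset, for brevity).
  const lowEntropy = { "sec-ch-ua-mobile": "?1", "sec-ch-ua-platform": '"Android"' };
  let response = server(lowEntropy);
  const critical = response.headers["critical-ch"];
  if (critical) {
    // Step 4: retry once, adding whichever requested hints are permitted.
    const extra = {};
    for (const h of critical.split(",").map((s) => s.trim())) {
      if (h in allowed) extra[h] = allowed[h];
    }
    response = server({ ...lowEntropy, ...extra });
  }
  return response;
}

console.log(browserFetch({
  "sec-ch-ua-model": '"Pixel 7"',
  "sec-ch-ua-platform-version": '"13.0.0"',
}).body); // page for "Pixel 7"
console.log(browserFetch({}).body); // generic page
```

If the browser's settings withhold a hint, the server simply falls back to the generic page; it has no way to force the browser to share.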

A Behind the Scenes Look

You can actually view this interaction for any website you visit in Chrome DevTools (in the Network panel). Figure 4 shows what CNN uses. In this case, it makes no Client Hints request and receives back only the default low-entropy values (highlighted in yellow).

Figure 4 - CNN Client Hints Usage

Google, on the other hand, makes a large number of Client Hints requests and gets them back (Figure 5).

Figure 5 - Google’s Client Hints Requests

While I am a big fan of the Sandbox and Google's technology, I do find it interesting that the company that produces my browser (and thus sets the defaults for what can be shared) requests so much of the information its own browser makes available. This could just be that Google knows the tech and thus implements it as it should be used. And perhaps this amount of information is not enough to fingerprint my browser uniquely (I'd have to do the detailed calculations and don't have time right now). But as a consumer, it certainly causes me some concern about just how much Google is asking to know about my user agent.

Who Controls What Can Be Shared?

Which brings us to the question: who controls what gets shared from the user agent? Google sets the defaults, but there are browser settings the user can change to prevent the sharing of certain client hints. I have not been able to find a discussion of how those work, so to test it I turned off all tracking using the Data and Privacy settings in Chrome and then restarted my browser. I didn't see anything change. Even with these settings, Google was getting back a number of high-entropy signals (Figure 6):

Figure 6 - Google Client Hints Returned with All Tracking Settings in Chrome Turned Off

This is an area where I wish Google would provide more documentation, so business people in the industry can better understand how much control the user has over which high-entropy client hints are shared.

Client Hint Infrastructure at the Transport Layer

As we noted in Figure 3, there is a five-step process for sharing client hints. The extra HTTP round trips needed to support client hints can add significant overhead to page rendering. There is a workaround that builds on Application-Layer Protocol Negotiation (ALPN). ALPN is a Transport Layer Security (TLS) extension that allows the application layer to negotiate which protocol should be used over a secure connection, in a manner that avoids additional round trips and is independent of the application-layer protocols. It is used to establish HTTP/2 connections without additional round trips. A companion TLS extension, Application-Layer Protocol Settings (ALPS), rides on the same handshake and lets the server send protocol settings, such as an ACCEPT_CH frame, before the browser sends its first request. This enables a more efficient implementation of Client Hints Infrastructure, as shown in Figure 7. In this case, the Accept-CH information is embedded in the TLS handshake, saving two steps in the process. Note that the Critical-CH header is no longer needed, since there is no first step in which a default set of values is sent to the server and then retried. The TLS handshake carries the server's Accept-CH preferences, not the initial request.

Figure 7 - The Transport Layer Mechanics of Client Hints

That’s a lot of information for one day, so I’ll stop here. Next up: Browser Permissions.

Browser Fingerprinting & Client Hints

We’re Back

So, I am finally back at it after several weeks away from writing. My absence has partly been due to work and family obligations, but mainly it is due to the major announcement Google made on July 22 that it is deferring the deprecation of third-party advertising cookies in Chrome and instead implementing a consumer-choice mechanism.

Despite this change, Google said in multiple forums I attended that work on the Privacy Sandbox would continue unabated. But given such a major change, I wanted to let the dust settle before I jumped back into the fray. For the moment, things look stable, with no further major shifts in the offing. So I will pick up where I left off.

Continuing the discussion in the prior post on headers brings us to a discussion of browser fingerprinting and some new browser header elements designed to reduce the ability of companies to fingerprint a user agent. These elements come under the heading of Client Hints Infrastructure or a subset known as User Agent Client Hints.

In order to talk about Client Hints, I first need to introduce the concept of fingerprinting - what it is and how it works. Then we’ll discuss guidance from the W3C on a framework to reduce the ability to fingerprint. This also involves providing an introduction to some basic concepts of differential privacy. At that point, we’ll then discuss the Client Hints mechanism and how it attempts to accomplish the goals laid out in W3C’s framework. I will discuss the first three items in this post. In the next post I will then explore how the technologists have worked to reduce the ability to fingerprint using multiple methods, including Client Hints Infrastructure and User Agent Client Hints.

What Is Fingerprinting

Fingerprinting is a set of techniques for identifying a user agent from characteristics of the browser or the device on which it runs. Some of these techniques are deterministic (for example, reading the user agent header), but many are derived using statistical learning. I am particularly familiar with fingerprinting, as I built algorithms to do this work in 2012 in my first role in ad tech. At that time, fingerprinting was fairly new. Peter Eckersley of the Electronic Frontier Foundation had published one of the earliest papers on a variant known as browser fingerprinting, “How Unique Is Your Web Browser?”, in 2010. In that paper, Eckersley found that five characteristics of browsers (browser plugins, system fonts, the User-Agent string (UA), HTTP Accept headers, and screen dimensions) allowed his team to identify a browser uniquely about 84% of the time. Note that this didn't even take IP address into account.

At the same time, Eckersley built a web-based tool called Panopticlick to test browser uniqueness. That tool still exists today at www.coveryourtracks.eff.org. A separate tool, called AmIUnique, is also available. To give you a sense of how powerful browser fingerprinting is today, I put my Chrome browser (in which I am currently writing this) through AmIUnique, since its report is a bit easier to comprehend. Even though I have multiple layers of protection from online tracking, AmIUnique could uniquely identify my browser (a partial printout is shown in Figure 1; the full analysis is shown in an appendix at the end of this article). In fact, it could use my browser protection elements, such as my Do Not Track setting or my Ghostery plugin, as part of the fingerprint.

Figure 1 - Partial Printout for My Browser from AmIUnique.org

Since Eckersley published his research, there has been a large body of further work that identifies and tests browser/device features to determine the most impactful. One especially robust study, which tracked 2,315 participants on a weekly basis for 3 years, examined over 300 browser and device features. However, most fingerprinting techniques rely on somewhere between 10 - 20 features. These are shown in the top half of the table in Figure 2.

Figure 2 - Main Categories of Browser and Device Features Used for Browser Fingerprinting

Mobile devices have other features that can be fingerprinted. These include compass, accelerometer, gyroscope, and barometer readouts. I won't cover these in any detail here, as right now they are tertiary signals: only one or two companies actually use these features in any way to fingerprint mobile devices. But I mention them here for completeness and to call out the fact that mobile fingerprinting uses slightly different methods to accomplish device (vs. browser) fingerprinting.

Some of these features are easily available in the contents of web requests. An example is the user agent header. Using just these features for creating a fingerprint is called passive fingerprinting. However, most fingerprinting is active, which means it depends on JavaScript or other code running in the local user agent to observe additional characteristics.
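Here is a minimal sketch of the passive idea in JavaScript (Node.js): hash a handful of header values that arrive with every request into a short identifier. The passiveFingerprint() function and its choice of headers are illustrative assumptions, not any vendor's method; real fingerprinting combines far more signals, including IP address and the active, script-based signals described above.

```javascript
const crypto = require("crypto");

// Toy passive fingerprint: hash a few header values sent with every request.
// The header list is an illustrative assumption.
const FIELDS = ["user-agent", "accept", "accept-language", "accept-encoding"];

function passiveFingerprint(headers) {
  const material = FIELDS.map((f) => `${f}=${headers[f] ?? ""}`).join("\n");
  // A 64-bit (16 hex character) prefix of SHA-256 is plenty for a demo ID.
  return crypto.createHash("sha256").update(material).digest("hex").slice(0, 16);
}

const requestHeaders = {
  "user-agent": "Mozilla/5.0 (Linux; Android 13; Pixel 7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.0.0 Mobile Safari/537.36",
  accept: "text/html",
  "accept-language": "en-US,en;q=0.9",
  "accept-encoding": "gzip, deflate, br",
};

// The same browser sending the same headers gets the same ID on every visit,
// with no cookie involved.
console.log(passiveFingerprint(requestHeaders));
```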

There is a third form of fingerprinting, called cookie-like fingerprinting, which involves techniques that circumvent the end user's attempts to clear cookies. Evercookie, invented by Samy Kamkar in 2010, is an example. Evercookie is a JavaScript application programming interface (API) that identifies and reproduces intentionally deleted cookies in the client's browser storage. It hides duplicate copies of cookies and critical identifying information in other storage locations in the browser, such as IndexedDB or web history storage, so that when the user agent returns to the site, that information can be queried and retrieved, even if cookies have been deleted.
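The respawning idea behind Evercookie can be shown with a toy simulation in JavaScript. Each Map below stands in for a separate browser storage location; the store names and functions are hypothetical, and nothing here touches real browser storage. It illustrates why clearing only cookies is not enough, and why privacy-minded specifications require related storage to be cleared together.

```javascript
// Toy simulation of Evercookie-style respawning (hypothetical names).
// Each Map stands in for a separate browser storage location.
const stores = {
  cookie: new Map(),
  localStorage: new Map(),
  indexedDB: new Map(),
};

// Write the same identifier into every store.
function writeEverywhere(key, value) {
  for (const store of Object.values(stores)) store.set(key, value);
}

// Read the ID from any surviving copy, then restore it to every store.
function readAndRespawn(key) {
  for (const store of Object.values(stores)) {
    if (store.has(key)) {
      const value = store.get(key);
      writeEverywhere(key, value);
      return value;
    }
  }
  return undefined;
}

writeEverywhere("uid", "abc123");
stores.cookie.clear();                 // the user clears cookies only
console.log(readAndRespawn("uid"));    // abc123
console.log(stores.cookie.get("uid")); // abc123 (the cookie is back)
```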

Why the W3C Cares About Fingerprinting

While I am focused on explaining Chrome's approach to privacy in the Privacy Sandbox, browser fingerprinting is a broad issue that all browser manufacturers care about. The World Wide Web Consortium (known by its shorthand name, the “W3C”) has published a document entitled “Mitigating Browser Fingerprinting in Web Specifications” that provides guidance to the various working groups developing web specifications. The point of the guidance is to ensure that each working group considers the fingerprinting “surface” its specification creates and works to minimize it.

The W3C leadership has been concerned about fingerprinting for quite some time. But it has become especially concerned about fingerprinting as cookies or other obvious forms of cross-site tracking are deprecated. This is because statistical methods of fingerprinting will become the de facto workaround as other methods are restricted. It doesn't pay to close the front door when the back door is wide open. So browser manufacturers, including Google, are enhancing the privacy features of their browsers to reduce the ability to fingerprint even as they are removing obvious cross-site tracking mechanisms like cookies.

Which brings us to the new Client Hints and User Agent Client Hints APIs as one technology to reduce the ability to fingerprint a browser. As part of this discussion, we are going to have to delve into the topic of entropy, which comes from the information theory developed by Claude Shannon in 1948. This will serve as an introduction to a very mathematical topic that will become exceedingly critical later in our discussions about privacy budgets and the Attribution Reporting API. But for now, a high-level summary will suffice.

What are Client Hints and User Agent Client Hints APIs?

Client Hints Infrastructure is a specification that identifies a series of browser and device features and allows access to the information about them to be controlled by the user agent in a privacy-preserving manner. It uses several techniques to accomplish this:

- It allows each browser manufacturer to establish a “baseline” set of user agent features that can be easily available for any website to request for the purposes of serving content.

- It also identifies a set of “critical” features that a website can request in order to serve a web page correctly. These features are not easily available because they provide a large amount of information value - known as entropy - that can be used to fingerprint a user agent. Examples of this are the exact operating system version on the device and the physical device model.

- It provides for the ability of the browser manufacturer to give some control of these settings to the end user in a consumer-friendly fashion.

- It establishes a structured mechanic for content negotiation of these elements between the user agent and a web server.

- It allows for information sharing only between the user agent and the primary web server (the top-level domain). Third-parties whose content is on a web page cannot gain access to this information without express permission from the primary website.

- All stored opt-ins for features controlled by client hints must be cleared whenever the user deletes their cookies or the session ends.

There are several types of client hints, each of which is handled differently:

- UA client hints contain information about the user agent that once would have been found in the user-agent header. There is a separate specification for these features, appropriately named the User Agent Client Hints Specification. It extends Client Hints to provide a way of exposing browser and platform information via User-Agent response and request headers, and a JavaScript API.

- Device client hints contain dynamic information about the configuration of the device on which the browser is running.

- Network client hints contain dynamic information about the browser's network connection.

- User Preference Media Features client hints contain information about the user agent's preferences as represented in CSS media features.

As we will discuss later, these hints are requested using a new response header called Accept-CH, and each data element communicated in a request/response interaction is carried in its own request header. For UA client hints, those header names begin with Sec-CH-UA: CH stands for client hints and UA for user agent, while the Sec- prefix marks the header as one that page scripts are not allowed to set or modify.

To get to that point, we need to go step-by-step through three topics. First we will take a walk down memory lane and review the history of browsers and the information they share. Next we will begin the discussion of entropy. Then we will go through and show some of the simple things that browser manufacturers did even before the Client Hints Specification to limit fingerprinting from the user agent header.

The History of the User Agent Header

User agent strings date back to the beginning of the World Wide Web. Mosaic was the first truly widely adopted browser. It was released in 1993, and it had a very simple user-agent string: NCSA_Mosaic/1.0, which consisted of the product name and its version number.

The original purpose of the user-agent string was to allow for analytics and debugging of issues within the browser implementation. At that time, the W3C recommended it be included in all http requests. Thus, openly including the user-agent header became the normal practice.

But as the web evolved, so did the user agent string. Browsers, the devices they ran on, and the operating systems they supported multiplied. Many major and minor versions of all three platforms (browser, device, OS) were in use at the same time. The combinations became extensive, and it became difficult for web developers to have their code run correctly on all of them. Thus the user agent header added more information so that the web server would know which combination it was serving and could adapt the code to ensure a web page rendered properly on that combination of platforms. Before Client Hints and User Agent Client Hints, a user agent header looked something like this (I will show what this looks like after User Agent Client Hints in the next post):

Mozilla/5.0 (Linux; Android <span style={{color: '#016F01' }}>13; Pixel 7</span>) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.0.0 Mobile Safari/537.36

As you can see, it contains a lot of information, passed in the clear and available to any website automatically with every request. Mind you, it looks like a lot of gobbledygook, and how it became this way is a story in and of itself (for those interested, a very humorous take on the evolution can be found in Aaron Andersen's post "History of the browser user-agent string"). But the key point is that the user agent header evolved to create a better user experience. No one was really thinking about the privacy implications at the time, so no one thought twice about openly sharing that information.

But then came commercialization and advertising, which has consistently followed every new medium since the mid-1800s like bees to nectar. The unique part of this new medium was that its effectiveness could be measured in detail. Slowly but surely, advertisers and publishers got more sophisticated in their ability to know who they were advertising to, in order to maximize their now-measurable metrics like conversion rate and return on ad spend. They discovered that the very public information in the user-agent header, when combined with other signals, allowed them to easily identify a specific viewer.

These techniques, of which fingerprinting was only one, created significant privacy concerns among regulators and consumers. Consumers especially did not like that they kept seeing the same ads over-and-over on every site they visited, which occurred before good frequency capping tools existed. They felt stalked and surveilled, which ultimately resulted in privacy regulations like GDPR.

Equally important, the values of platform owners in the industry, especially Apple and Mozilla (which evolved from Mosaic) began to change. After all, their executives were consumers as well who experienced tracking. Plus they had to worry about regulators imposing increasingly restrictive regulations and penalties for failure to follow those regulations. Like any new behavior, at first this change was due to these mandates, but ultimately they became a reflex, and now almost a religion. And where one browser developer went, others followed due to the standards-based approach to web technologies that occurs through the W3C.

The annual W3C meetings are a place where the key technical owners of browsers (and in the case of Apple and Google, operating systems and devices) come together and share ideas. These are some of the brightest and most opinionated minds on the planet, and the discussions between them can be wide-ranging, brilliantly insightful, and intense. It was in these meetings and very specific working groups that the privacy-first mantra first emerged and then became the undisputed correct approach. Apple started it with the creation of their Identifier for Advertisers (IDFA), which was the first control with a mandatory opt-out default. Ultimately, their viewpoint came to be accepted across the board. Since then, a huge amount of work has been done across multiple working groups to ensure that the consumer has a privacy-first experience of the web. Much of the technology I discuss in theprivacysandbox.com emerged from this work.

And while cookie deprecation and visible user controls for opting out of cookies in Safari and Firefox were some of the earliest (and easiest) results of this work, masking information in the user-agent header wasn’t far behind because its very public sharing of identifying information was an obvious privacy vulnerability.

For this, the industry turned to something called information theory and its notion of entropy to solve the problem.

Introduction to Information Theory

Information Theory was a completely new field of endeavor created almost out of whole cloth by Claude Shannon while working at Bell Labs in 1948. Shannon had the insight that you could measure the amount of information in any communication. Today we take the concept of “signal-to-noise ratio” (an indication of the quantity of information in a transmission) for granted. But in 1948 the concept that you could measure information was unheard of.

The intuition behind quantifying information is that unlikely events, which are “surprising”, contain more information than high-probability events, which are not surprising. Rare events are more uncertain and thus require more information to represent them than common events. Put the other way around, and this is what matters for us in the privacy domain, observing a rare event tells you far more about its source than observing a common one.

Let’s take an example that impacts the user-agent header and which was actually implemented. This is the current breakdown of Windows OS versions in the market (Figure 3):

Figure 3 - Market Share of Windows Versions as of September 2024 (Source: Wikipedia)

So if there are 250 million Windows PCs that access the Internet today and if the user agent says that I am dealing with a Windows 11 device, I know that I am dealing with a 1 in 84 million chance of identifying an individual user agent. Not great for targeting an ad. But if I see a machine running Windows XP, that gives me a 1 in 850,000 chance of identifying an individual user agent. That is a less likely event, and as such has much higher information content.

But now let's look at the percentage of Windows 11 minor releases (Figure 4):

Figure 4 - Windows 11 Versions (as a percentage of all Windows 11 Machines)

If I see a Windows machine with a 24H2 minor release, then my ability to identify an individual user agent is 1 in 1 million. That is much better than just knowing the major version, and contains more information, but still less than the “surprise” I get finding that there is still a Windows XP machine out there.
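The arithmetic behind these comparisons can be expressed in bits: learning that a device belongs to a group holding a share p of the population gives -log2(p) bits of identifying information. Here is a small JavaScript sketch using the rough figures above (250 million PCs, and groups of 84 million, 1 million, and 850,000); bitsFromShare() is a hypothetical helper, and the results are only as good as those assumptions.

```javascript
// Bits of identifying information from learning that a device belongs to a
// group of `matching` devices out of `population`: I = -log2(matching / population).
function bitsFromShare(matching, population) {
  return -Math.log2(matching / population);
}

const WINDOWS_PCS = 250e6; // population assumed in the text
console.log(bitsFromShare(84e6, WINDOWS_PCS).toFixed(2));  // Windows 11: 1.57
console.log(bitsFromShare(1e6, WINDOWS_PCS).toFixed(2));   // Windows 11 24H2: 7.97
console.log(bitsFromShare(850e3, WINDOWS_PCS).toFixed(2)); // Windows XP: 8.20
```

The ordering (Windows 11, then 24H2, then XP) matches the "surprise" ranking in the text. Bits from different attributes only add up cleanly if the attributes are independent, which real user-agent attributes often are not.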

I will not go into the mathematical logic here, but for purposes of this discussion it is important to understand that the amount of information decreases non-linearly as the probability of an event increases (Figure 5).
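For readers who want the formula behind a curve like Figure 5, the standard definition of the information content (self-information) of an outcome y with probability p(y) is shown below. The second identity shows one consequence: every halving of an outcome's probability adds exactly one bit, which is why the curve climbs so steeply near zero.

$$
I(y) = -\log_2 p(y), \qquad p(y') = \tfrac{1}{2}\,p(y) \;\Rightarrow\; I(y') = I(y) + 1
$$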

Figure 5 - The Probability vs. Information Curve

What the chart shows is that the level of surprise drops faster than linearly as you move from low-probability to high-probability events. This means low-probability events provide more information per unit of change in likelihood than a linear curve would suggest. Or put another way, removing a low-likelihood predictor from a predicting equation removes a lot more information. As you will see, this last statement is why we care so much about low-probability vs. high-probability events as we attempt to limit information leakage via Client Hints Infrastructure.

Here is the second important point, and it gets to a definition of what is called entropy. Note that the chart represents the tradeoff between probability and information for a single variable. But the user agent contains eight critical pieces of information (variables) that allow for identification of a specific user agent. We need to know how much total information is contained in this complete set of features. We can then identify which features are high information versus low information and alter those with high information, since this will have the most impact on identifiability of a specific user agent.

This is where the concept of entropy comes into play. Let’s say we have a specific user agent, X, we want to identify. The way we do that is to look at all the available elements in the user agent and the information they contain and determine the probability that that combination of element values (e.g. Android, Version 14.5, Chrome, Release 127.1.1.5, mobile device, manufacturer= Google, model = Pixel, model version 7) is an exact match to device X. In other words, if we put this into an equation where f(x) is the predictive mathematical function and p(y) represents “the probability that y has a certain value" then we can write this general equation as follows:

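f(x) = I(OS) + I(OS version) + I(Browser) + I(Browser version) + I(device type) + I(manufacturer) + I(model) + I(model version), where I(y) = -log2 p(y) is the number of bits of information carried by feature y (this simple sum assumes the features are independent of one another)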

The units of f(x) are bits of information; each feature contributes some number of bits to the total. Also note that f(x) is not a single fixed number: its value varies with the actual combination of values drawn from each feature's probability distribution. The more bits of information, the higher the likelihood that we can say f(x) = X, that is, that we can identify the specific user agent.

The expected (average) number of bits in f(x), taken across all user agents, is known as the Shannon entropy.

The Shannon entropy of a distribution is the expected amount of information in an event drawn from that distribution. It gives a lower bound on the number of bits needed on average to encode an outcome drawn from a distribution P.

The intuition for entropy is that it is the average number of bits required to represent or transmit an event (e.g., identifying a specific user agent) drawn from the probability distribution f(x) for the random variable X.
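Shannon entropy for a discrete distribution is H = -Σ p·log2(p). Here is a minimal JavaScript sketch of that formula; shannonEntropy() is a hypothetical helper, and the example distributions are made up for illustration. The comparison shows why a feature where almost everyone shares the same value carries little identifying information on average, even though its rare values are very revealing.

```javascript
// Shannon entropy H = -sum(p * log2 p) of a discrete distribution, in bits.
function shannonEntropy(probabilities) {
  const total = probabilities.reduce((sum, p) => sum + p, 0);
  if (Math.abs(total - 1) > 1e-9) {
    throw new Error("probabilities must sum to 1");
  }
  return probabilities
    .filter((p) => p > 0) // by convention, 0 * log2(0) contributes nothing
    .reduce((h, p) => h - p * Math.log2(p), 0);
}

// Four equally likely values: 2 bits on average.
console.log(shannonEntropy([0.25, 0.25, 0.25, 0.25])); // 2
// One dominant value and three rare ones: much less on average (about 0.24 bits).
console.log(shannonEntropy([0.97, 0.01, 0.01, 0.01]));
```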

If a combination of p(x)'s yields an f(x) with 30 bits of information, enough for an accurate prediction of a single user agent's identity, then our job as privacy experts is to alter or remove those p(x)'s so that as little information as possible is available to make that identification. The fewer bits allowed in the actual calculation relative to the 30 bits, the coarser our ability to make a 1-to-1 match. For example, if 30 bits = 1 device, then, because each bit removed roughly doubles the number of matching candidates, 20 bits would only resolve to about 1,000 devices (2^10), and 10 bits to about 1 million devices (2^20). Recall that the curve is non-linear.
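The doubling arithmetic can be made explicit with a short JavaScript sketch. It rests on an idealized assumption that each bit of information exactly halves the pool of candidate devices, so a population that takes 30 bits to single out one device has 2^30 members; devicesMatching() is a hypothetical helper.

```javascript
// Idealized model: each observed bit halves the candidate pool, so a
// population needing `totalBits` to single out one device has 2^totalBits
// members, and observing `observedBits` leaves 2^(totalBits - observedBits).
function devicesMatching(totalBits, observedBits) {
  return 2 ** (totalBits - observedBits);
}

console.log(devicesMatching(30, 30)); // 1
console.log(devicesMatching(30, 20)); // 1024
console.log(devicesMatching(30, 10)); // 1048576
```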

We will stop here for today as I just poured a huge number of bits of information (ok, I’m not above a bad pun) into your brain. We’ll pick up next on how privacy experts have gone about using these concepts to ensure the privacy of user agents.

Appendix: Full AmIUnique Printout for My Browser

Shown below is the full printout of the AmIUnique analysis shown partially in Figure 1. This should give you a good sense of just how much information is available to fingerprint your device.

Headers and Google Privacy Sandbox: An Overview

Introduction

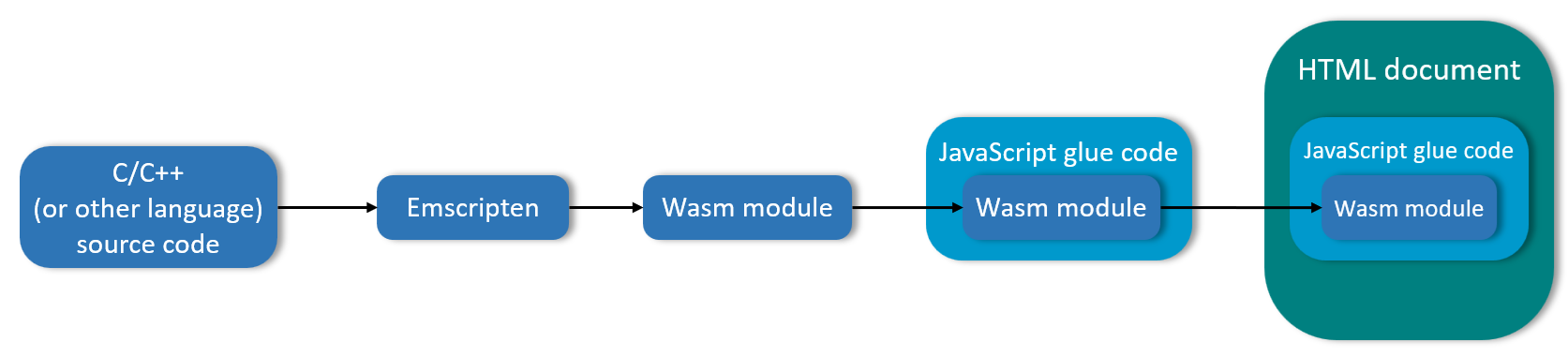

We now move into the last two topics before we leave the browser side of the Privacy Sandbox behind: HTTP headers and browser permissions. We already did a quick review of HTTP headers in the post The Big Picture and Core Browser Elements. In this post we will delve a bit further, although not into a complete review of all standard HTTP headers, which are numerous, beyond the scope of this post, and not really needed to understand the Sandbox. In the next post, we will talk about another unique element of the Google Privacy Sandbox: the User Agent Client Hints API. User Agent Client Hints is based on, and is a separate specification from, the more general Client Hints API.

User Agent Client Hints is a collection of HTTP and user-agent features that enables privacy-preserving, proactive content negotiation between a browser and a server. They allow the browser to control what information can be shared, and with which sites, via an explicit cross-origin delegation mechanism. Once again, in case you have forgotten this by now (is that even possible?), one critical design feature of the Google Privacy Sandbox is to avoid cross-site reidentification of a user or user agent ID. User Agent Client Hints helps prevent various forms of browser fingerprinting that could be used for such cross-site reidentification.

What are HTTP Headers

HTTP headers are an integral part of the HTTP protocol as it exists today. Headers carry essential information between the user agent and the server so the two can communicate effectively. Headers like the user agent header allow the server to send back the right configuration to render the web document correctly on a particular device/browser combination. Others describe the server that sent the response. Others allow or prevent cross-origin resource sharing. Others handle security, mitigating potential risks like cross-site scripting attacks or clickjacking, among many other vulnerabilities. Those are just a few examples of the range of functions that headers provide in the back and forth between user agent and server side. Most importantly from the perspective of the Sandbox, standards groups can define custom HTTP headers unique to their protocol to support the applications they wish to deploy in the browser.

In many cases, custom HTTP headers like those used in the Google Privacy Sandbox are developed in parallel with an equivalent way to perform the same function in JavaScript. The reason is that JavaScript can slow web page response and rendering times, and many publishers do not like cluttering their pages with JavaScript tags. In fact, if you look at ad tech history, one of the main reasons that Supply-Side Platforms (SSPs) emerged early on was that publishers didn't want to add JavaScript tags to their websites from every advertiser or Demand-Side Platform (DSP) they interacted with. SSPs required only one tag on the publisher's website to handle any and all ad requests. Moreover, performance of the code itself becomes critical when you are dealing with an application requiring response times of less than 100ms. Headers are often an alternative approach that provides higher performance.

As an example of the conversations that go on around this issue, here is an excerpt from the July 3 meeting notes of the Protected Audiences API Working Group about creating HTTP headers to replace core JavaScript calls in the Protected Audiences API:

[Yao Xiao] Basically what happens today - the way tagging works - on the advertiser side we inject the iFrame and the server side returns a second response… But there are performance issues around iFrames and, equally, we have to make sure the tag supports the joinAdInterestGroup() API, which is a JavaScript API. But there are companies/users that don’t want to support a JavaScript API, they want a header-based solution instead. We have already done something like this for attribution reporting API and shared storage API. If we are going to move to the header-based approach above, we want to provide header-based support for all three endpoints - joinAdInterestGroup, leaveAdInterestGroup(),.....

[Isaac Foster] Ability to create interest groups via header - doing the light shell with refresh would be highly valued. Publishers are always hesitant to add JavaScript to their page.

How HTTP Headers are Structured

HTTP headers consist of two parts. The first part is the key (the header name). The second part is the information/value to be communicated. The key and the value in the key/value pair are separated by a colon. If a header carries more than one value, the values are usually separated by commas (for example, Accept-Encoding: gzip, br). Parameters that qualify a value are separated from it by a semicolon, as in these simple examples:

Content-Type: text/html; charset=UTF-8

Content-Type: multipart/form-data; boundary=sample
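To make the name/value/parameter structure concrete, here is a minimal Python sketch that splits a header line the way the examples above are built. It is a simplification, not a standards-compliant parser: it assumes commas only ever separate multiple values and never appear inside a value (which is not true of, for example, the date format used by Expires), and `parse_header_line` is a hypothetical helper name.

```python
from typing import Dict, List, Tuple


def parse_header_line(line: str) -> Tuple[str, List[Tuple[str, Dict[str, str]]]]:
    """Split 'Name: value; param=x, value2' into (name, [(value, {param: x}), ...])."""
    name, _, raw_value = line.partition(":")  # the first colon separates name from value
    values = []
    for item in raw_value.split(","):  # commas separate multiple values (simplified)
        # semicolons separate a value from its parameters
        main, *params = [part.strip() for part in item.split(";")]
        param_dict = {}
        for param in params:
            key, _, val = param.partition("=")
            # parameter names are case-insensitive, so normalize them
            param_dict[key.strip().lower()] = val.strip().strip('"')
        values.append((main, param_dict))
    return name.strip(), values


print(parse_header_line("Content-Type: text/html; charset=UTF-8"))
# ('Content-Type', [('text/html', {'charset': 'UTF-8'})])
print(parse_header_line("Accept-Encoding: gzip, br"))
# ('Accept-Encoding', [('gzip', {}), ('br', {})])
```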

The sender includes these headers as part of the header section of the HTTP message.

Types of HTTP Headers

Figure 1 is a table showing the types of HTTP headers, what they are used for (generally), the restrictions on them, and examples of both standard headers and headers created to support the Google Privacy Sandbox. I am not going to drill further into the different headers as, again, it isn’t necessary to understand the implications for the Sandbox. I will probably write a tech brief later to go through all headers by category so readers will have that as a resource. The main thing is to understand the example - how the Sandbox has created a variant of a type of header for the specific purpose of supporting its functionality.

Figure 1 - Types of Headers, Their Properties, and Examples

How Headers Work: A Generic Example

Given that we have talked a great deal about browser storage, it would be natural to ask “Where are headers stored in the browser?” Figure 1 mentions a limit of 4,096 bytes on the length of a single header in Chrome, and a total of 250 KB across all headers from all websites and web pages. That is not a great deal of space to provide in the browser, especially if, like me, you keep over 100 tabs open concurrently. While there is no theoretical limit on the number of headers that could fit within the total size limit (1,000 headers of 250 bytes each is technically possible), that is highly impractical. Most websites use a reasonable number of headers (typically fewer than 50), and exceeding that could lead to performance issues and compatibility problems.
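As a rough illustration of the arithmetic, the sketch below checks a set of headers against the two numbers quoted in Figure 1. Treat both constants as assumptions taken from the figure rather than guaranteed limits; actual limits differ between browsers, servers, and proxies. The helper names `header_size` and `check_headers` are hypothetical.

```python
from typing import Dict, List

# Limits as quoted in Figure 1; treated here as assumptions, not guaranteed browser values
MAX_SINGLE_HEADER_BYTES = 4_096
MAX_TOTAL_HEADER_BYTES = 250_000  # ~250 KB


def header_size(name: str, value: str) -> int:
    """Bytes the header occupies on the wire as 'Name: value' plus CRLF."""
    return len(f"{name}: {value}\r\n".encode("utf-8"))


def check_headers(headers: Dict[str, str]) -> List[str]:
    """Return a list of human-readable limit violations (empty if none)."""
    problems = []
    total = 0
    for name, value in headers.items():
        size = header_size(name, value)
        total += size
        if size > MAX_SINGLE_HEADER_BYTES:
            problems.append(f"{name}: {size} bytes exceeds {MAX_SINGLE_HEADER_BYTES}")
    if total > MAX_TOTAL_HEADER_BYTES:
        problems.append(f"total: {total} bytes exceeds {MAX_TOTAL_HEADER_BYTES}")
    return problems


print(check_headers({"Content-Type": "text/html; charset=UTF-8"}))  # []
print(check_headers({"X-Big": "a" * 5_000}))  # ['X-Big: 5009 bytes exceeds 4096']
```

Note that the text’s example of 1,000 headers at 250 bytes each works out to 250,000 bytes, which lands right at the quoted total.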

So if that is the case, how do headers actually work? Are they stored, and if so, for how long?

What I will do in this section is first talk about the generic mechanism for how to think about header processing and then I will give an example around a specific header.

Figure 2 shows the generic flow for a header request-response cycle. The small black-and-white boxes with stubs represent the RAM for the device to which they are attached.

Figure 2: A Generic Request/Response Header Flow

Step 1: When the user agent makes a call to a website, in this case www.example.com, the user agent builds the request headers in the client’s memory based on the URL, cookies, and other relevant information.

Step 2: The user agent sends the request headers along with the request data to the server.

Step 3: The server receives the request and stores it in memory for processing.

Step 4: The server prepares the response headers and the payload for the user agent.

Step 5: The server sends the response headers along with the appropriate payload to the user agent.

Step 6: The server deletes the request and its response headers from the server memory.

Step 7: Upon receiving the response, the user agent stores the response headers in client memory for processing.

Step 8: The user agent uses the response headers to understand the content type, status code, and other crucial details, and the payload is displayed or used by the web page as needed.

Step 9: Once the request-response cycle completes, the user agent discards the headers from memory to free up resources.
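The nine steps can be observed with Python’s standard library alone. This sketch assumes a throwaway local server on 127.0.0.1 standing in for www.example.com; the names `ExampleHandler` and `fetch_once` are hypothetical. The headers live only in the in-memory request and response objects, which are dropped once the function returns.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class ExampleHandler(BaseHTTPRequestHandler):
    """Stand-in for www.example.com (hypothetical)."""

    def do_GET(self):
        # Step 3: the request line and headers have been read into memory (self.headers)
        accept = self.headers.get("Accept", "*/*")
        # Step 4: prepare the payload and the response headers
        body = f"<p>You sent Accept: {accept}</p>".encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=UTF-8")
        self.send_header("Content-Length", str(len(body)))
        # Step 5: the headers are flushed first, then the payload
        self.end_headers()
        self.wfile.write(body)
        # Step 6: this handler object, and the headers it holds, is discarded after the request

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def fetch_once():
    server = HTTPServer(("127.0.0.1", 0), ExampleHandler)  # port 0 = pick any free port
    server.timeout = 5  # don't wait forever if no request arrives
    thread = threading.Thread(target=server.handle_request)  # serve exactly one request
    thread.start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
        # Steps 1-2: build the request headers in memory and send them
        conn.request("GET", "/", headers={"Accept": "text/html"})
        # Step 7: the response headers are parsed into an in-memory object
        resp = conn.getresponse()
        # Step 8: use the headers to interpret the payload
        status = resp.status
        content_type = resp.getheader("Content-Type")
        body = resp.read().decode("utf-8")
        conn.close()
    finally:
        thread.join()
        server.server_close()
    # Step 9: resp and conn go out of scope here; nothing was written to disk
    return status, content_type, body


print(fetch_once())
# (200, 'text/html; charset=UTF-8', '<p>You sent Accept: text/html</p>')
```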

So whatever meta-information headers carry to help process and return data to a client, in most cases storage is not an issue. Headers are not stored on the client; they are held in memory, and only until processing of the headers is complete. At that point they are discarded, making room for subsequent requests and responses.

This does not mean that data carried in HTTP headers is never stored on either the user agent or the server side. The Set-Cookie header is a good example of this. The header below is a response header that causes the user agent to store a cookie in the Cookies SQLite file on the user agent’s local machine.

Set-Cookie: sessionId=abc123; Expires=Wed, 21 Oct 2024 07:28:00 GMT; Path=/
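Here is a sketch of the parsing a user agent does with that Set-Cookie value before persisting it, using Python’s `http.cookies.SimpleCookie` to split out the name, value, and attributes. The helper names are hypothetical, and a real browser applies many more rules (domain matching, SameSite, Secure) than shown here.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from http.cookies import SimpleCookie

SET_COOKIE = "sessionId=abc123; Expires=Wed, 21 Oct 2024 07:28:00 GMT; Path=/"


def parse_set_cookie(header_value):
    """Return (name, value, path, expires) for a single-cookie Set-Cookie value."""
    jar = SimpleCookie()
    jar.load(header_value)  # attribute names such as Expires/Path are case-insensitive
    name, morsel = next(iter(jar.items()))
    expires = parsedate_to_datetime(morsel["expires"])  # timezone-aware (GMT)
    return name, morsel.value, morsel["path"], expires


def is_expired(expires, now):
    """A persistent cookie stops being sent once its Expires time has passed."""
    return now >= expires


name, value, path, expires = parse_set_cookie(SET_COOKIE)
print(name, value, path, expires.isoformat())
# sessionId abc123 / 2024-10-21T07:28:00+00:00
print(is_expired(expires, datetime(2025, 1, 1, tzinfo=timezone.utc)))  # True
```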

Headers like Cache-Control, Expires, and ETag are used to control caching behavior. These headers can lead to the storage of responses in the browser cache or intermediary caches.

- Cache-Control: This header can specify directives for caching mechanisms in both requests and responses. For example, Cache-Control: max-age=3600 indicates that the response can be cached for 3600 seconds.

Cache-Control: max-age=3600

- Expires: This header provides an absolute date/time after which the response is considered stale.

Expires: Wed, 21 Oct 2024 07:28:00 GMT

- ETag: This header is used for cache validation. It allows the server to identify if the cached version of a resource matches the current version.

ETag: "686897696a7c876b7e"

There are numerous other headers that cause data to be stored in cache or on the local client. These are just a few examples to give you a sense of the range of ways a header can use or store data locally before it is deleted from client or server memory.
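The sketch below shows, in simplified form, how a cache might use the three headers above: Cache-Control max-age takes priority over Expires when computing how long a response stays fresh (as RFC 9111 requires), and an ETag lets the server answer a conditional request with 304 Not Modified instead of resending the body. This is an assumption-laden simplification: it ignores other directives such as no-store and s-maxage, weak validators, and case-insensitive header lookups, and the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def freshness_lifetime(headers: dict) -> Optional[timedelta]:
    """max-age wins over Expires; Expires is measured relative to the Date header."""
    for directive in headers.get("Cache-Control", "").split(","):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "max-age" and value.isdigit():
            return timedelta(seconds=int(value))
    if "Expires" in headers and "Date" in headers:
        return parsedate_to_datetime(headers["Expires"]) - parsedate_to_datetime(headers["Date"])
    return None  # no explicit freshness information


def is_fresh(headers: dict, received_at: datetime, now: datetime) -> bool:
    """Simplified: the response's age is just the time since it was received."""
    lifetime = freshness_lifetime(headers)
    return lifetime is not None and (now - received_at) < lifetime


def conditional_get_status(current_etag: str, if_none_match: Optional[str]) -> int:
    """Server side: 304 if the client's cached ETag still matches, otherwise 200 with a full body."""
    if if_none_match is not None:
        tags = [tag.strip() for tag in if_none_match.split(",")]
        if "*" in tags or current_etag in tags:
            return 304
    return 200


received = datetime(2024, 10, 21, 6, 28, tzinfo=timezone.utc)
print(is_fresh({"Cache-Control": "max-age=3600"}, received, received + timedelta(minutes=30)))  # True
print(conditional_get_status('"686897696a7c876b7e"', '"686897696a7c876b7e"'))  # 304
```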

How Headers Work: The Content-Type Header

Now let’s drill into a specific example of how headers are processed. We will use a very common response header, the Content-Type header, as an example (Figure 3). The Content-Type header specifies the original media type of a resource before content encoding. It ensures proper interpretation by the client and helps reduce the likelihood of a cross-site scripting attack.

Figure 3 - Request and Response for the Content-Type Header

The right-hand side of Figure 3 shows a server with a resource - in this case a document - that is stored in multiple languages (English, French, Spanish), in multiple formats (html or pdf), with multiple potential encodings (gzip, br, compress). Encodings are compression algorithms used to reduce the amount of data that needs to be transferred over the network. As the diagram shows, there are three versions of the content: a URL for English (URL/en), a URL for French (URL/fr), and a URL for Spanish (URL/sp).

On the left-hand side of the diagram is the client, which wants to retrieve the English version of the pdf for download. That information is sent to the server in the request headers (Accept, Accept-Language, and Accept-Encoding), letting it know which content type it needs, the desired language of the content, and the types of content encoding that the user agent can process.

The server finds the correct content type in the correct language and sends it back using br content encoding, along with headers that indicate what it has sent back (a pdf, in English, encoded using br). Each line item in the response is a single response header, with the Content-Type header indicating it is returning a pdf. After completing the send, the server deletes the original request and the response headers from memory.

When the browser receives the response along with its response headers, it uses the information in those headers to select the correct decompression algorithm and then displays the English version of the pdf in a browser-based pdf viewer. Once the page is displayed, the user agent deletes the response headers.
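The negotiation in Figure 3 can be sketched as follows. This assumes the server offers the variants shown in the figure and picks the highest-weighted (q-value) match for each of the Accept, Accept-Language, and Accept-Encoding request headers. It uses the language tag `es` for the figure’s Spanish (URL/sp) variant. Matching is exact apart from wildcards, and the function names are hypothetical; real servers also handle language prefixes like `en-US`, media ranges like `application/*`, and more.

```python
from typing import Dict, List, Optional, Tuple

# Variants the server in Figure 3 can produce ("es" assumed for the URL/sp version)
OFFERED_TYPES = ["text/html", "application/pdf"]
OFFERED_LANGUAGES = ["en", "fr", "es"]
OFFERED_ENCODINGS = ["gzip", "br", "compress"]


def parse_weighted_list(header: str) -> Dict[str, float]:
    """'gzip;q=0.8, br' -> {'gzip': 0.8, 'br': 1.0}; a missing q means 1.0."""
    prefs = {}
    for item in header.split(","):
        token, *params = [part.strip() for part in item.split(";")]
        weight = 1.0
        for param in params:
            key, _, val = param.partition("=")
            if key.strip().lower() == "q":
                weight = float(val)
        if token:
            prefs[token.lower()] = weight
    return prefs


def best_match(offered: List[str], header: str) -> Optional[str]:
    """Pick the offered option with the highest q-value; exact match first, then wildcards."""
    prefs = parse_weighted_list(header)

    def weight(option: str) -> float:
        return prefs.get(option.lower(), prefs.get("*", prefs.get("*/*", 0.0)))

    acceptable = [option for option in offered if weight(option) > 0]
    return max(acceptable, key=weight, default=None)


def negotiate(request_headers: Dict[str, str]) -> Tuple[int, Dict[str, str]]:
    content_type = best_match(OFFERED_TYPES, request_headers.get("Accept", "*/*"))
    language = best_match(OFFERED_LANGUAGES, request_headers.get("Accept-Language", "*"))
    encoding = best_match(OFFERED_ENCODINGS, request_headers.get("Accept-Encoding", ""))
    if content_type is None or language is None:
        return 406, {}  # Not Acceptable: no variant satisfies the request
    response = {"Content-Type": content_type, "Content-Language": language}
    if encoding is not None:
        response["Content-Encoding"] = encoding  # otherwise the body is sent uncompressed
    return 200, response


print(negotiate({"Accept": "application/pdf", "Accept-Language": "en",
                 "Accept-Encoding": "gzip;q=0.8, br"}))
# (200, {'Content-Type': 'application/pdf', 'Content-Language': 'en', 'Content-Encoding': 'br'})
```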

User Agent Header Is In a Class of Its Own for Privacy

You may have noticed that the first row in Figure 1 includes a user agent header example. From a technical perspective, the user agent header is just another request header; that is its header type. But when it comes to privacy, it is in a category of its own. This is because the user agent header has been used by data scientists, along with other information like IP address, plug-ins, installed fonts, and screen resolution, to statistically “fingerprint” a browser as another way of tracking. As a result, we will cover the user agent header in extensive detail in the next post.
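To show why combining signals matters, here is a deliberately naive sketch that hashes a handful of attributes into one identifier. The signal names and values are hypothetical, and real fingerprinting relies on statistical matching rather than a single exact hash, but the underlying point holds: no one signal identifies a browser, while the combination can narrow it down considerably.

```python
import hashlib


def naive_fingerprint(signals: dict) -> str:
    """Hash a set of browser signals into a short identifier (illustration only)."""
    # Sort keys so the same signals always produce the same string, whatever the order
    canonical = "|".join(f"{key}={signals[key]}" for key in sorted(signals))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


# Hypothetical values; 203.0.113.0/24 is a documentation-only IP range
visitor = {
    "user_agent": "Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/1.0",
    "ip_address": "203.0.113.7",
    "screen": "2560x1440",
    "fonts": "Arial,Helvetica,Noto Sans",
}
print(naive_fingerprint(visitor))
# Changing a single signal yields a completely different identifier
print(naive_fingerprint(visitor) == naive_fingerprint({**visitor, "screen": "1920x1080"}))  # False
```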

Next Stop: Fingerprinting

The user agent header is not the only mechanism by which devices can be fingerprinted. So in the next post, we will start with an overview of fingerprinting and the various mechanisms used. Then we will explore two new, interrelated standards that have evolved in the Privacy Sandbox to help reduce “the exposure surface” for fingerprinting: the Client Hints API and the User Agent Client Hints API.

Private State Tokens

Introduction

So we have finished with storage, per se. But there is one last topic to discuss that is “indirectly” related to storage - Private State Tokens. Private State Tokens are a new mechanism that is part of the Google Privacy Sandbox. They are designed to help prevent fraud and abuse on the web while preserving user privacy.

Private state tokens are a completely invisible, private way to validate that real users are visiting a website. They allow one website or mobile app to validate, in a privacy-compliant way, that a particular user agent represents a real viewer, not a bot or other fraudulent entity. Once validated, the user agent stores the tokens so that the same or other websites or mobile applications can use them to quickly confirm that the end user is real, rather than having to perform a completely new validation. This validation lasts as long as the lifetime of the tokens, which each website or application developer can set based on the particular needs of their business.

Private State Tokens are intended to supplement, or replace, other validation mechanisms such as a CAPTCHA or a request for PII. They are also designed to convey trust signals while ensuring that user reidentification cannot occur through issuance of the tokens themselves. As such, they are a critical part of the Privacy Sandbox.

The reason private state tokens are indirectly related to storage is that they have their own unique storage area on the user’s hard drive in Chrome. Moreover, they are not physically an integral part of the browser itself - not a browser ‘element’ per se. So I grouped them in the module on storage in the browser elements image. As with CHIPS, however, private state tokens are their own privacy-preserving mechanism and a specific, unique topic that needs to be covered in its own right.

Private state tokens are part of a broader protocol called the Privacy Pass API. In 2022, Apple implemented a similar technology called Private Access Tokens, also based on Privacy Pass. I hope to discuss the Privacy Pass API, as well as the differences between Apple’s and Google’s implementations of the technology, in a future post. That is a bridge too far today, given how long this post will end up being.

Because the audience for www.theprivacysandbox.com is ad tech professionals, I am going to assume that you generally understand the concept of tokens. We discussed them a bit in the post on cookies. But if you are not familiar with tokens and how they are used in computing, here is a good introduction.

What Are Private State Tokens?

Tokens are a technical concept in computing: a token packages some information in a self-contained format that can be read by other computer programs. A cookie is one example of a type of token, but tokens can take numerous formats. Private state tokens are designed to enable trust in a user’s authenticity without allowing tracking. Their unique features include:

- They are encrypted. Private state tokens are encrypted in a way that prevents them from being linked to a specific user or user agent. All anyone can know is whether or not this particular requester is verified as a real person.

- They can be shared between websites without breaching user privacy. Private state tokens were designed to allow one website or app to validate that a user is “real” and place a series of private state tokens confirming that in the user’s browser or app. Later a second website can use that act of validation, contained in those tokens, to verify the user agent represents a real person without having to do their own validations and token issuance procedure.

- They are stored locally in the browser.

- They require one or more trusted issuers. Tokens are issued by trusted third parties that issue them to users’ browsers at a website’s request. There can be as many of these as the market has room for. As of this writing there are six: hCaptcha, Polyset, Captchafox, Sec4u (authfy), Amazon, and Clearsale. A trusted issuer is likely to be a PKI certificate authority of some kind, although nothing in the specification requires that.

- They are redeemable. The act of checking that a user has a valid token is called a redemption. A token is sent from the browser to the token issuer, who then verifies (redeems) the token and provides a confirmation back to the website in the form of a redemption record. This process occurs without the issuer being able to know anything about the identity of the user agent.

- Trusted issuers must be verified by the website requesting a redemption. The website that needs to verify the “realness” of a user must already have a relationship with a trusted issuer or must use what is known as a key commitment service to validate the issuer. Otherwise, it has no way to trust the company redeeming the token.
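To illustrate how an issuer can vouch for a token it cannot later recognize, here is a toy sketch of textbook blind RSA signatures. This is a swapped-in technique: blind RSA is the primitive behind the publicly verifiable Privacy Pass token type (the kind Apple’s Private Access Tokens use), whereas Chrome’s Private State Tokens use a different, VOPRF-based construction in which the issuer itself performs redemption. The blinding idea is the same, though. The key is deliberately tiny, and every function and class name here is hypothetical.

```python
import hashlib
import secrets
from math import gcd
from typing import Set, Tuple

# Toy RSA key built from two Mersenne primes: far too small for real use, illustration only
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # issuer's private exponent (Python 3.8+)


def token_hash(token: bytes) -> int:
    """Map a token to an integer modulo N."""
    return int.from_bytes(hashlib.sha256(token).digest(), "big") % N


def blind(token: bytes) -> Tuple[int, int]:
    """Browser: hide the token's hash behind a random factor r before sending it to the issuer."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if gcd(r, N) == 1:
            break
    return (token_hash(token) * pow(r, E, N)) % N, r


def issuer_sign(blinded: int) -> int:
    """Issuer: sign what it receives; it never sees token_hash(token) itself."""
    return pow(blinded, D, N)


def unblind(blind_signature: int, r: int) -> int:
    """Browser: strip r, leaving an ordinary RSA signature on token_hash(token)."""
    return (blind_signature * pow(r, -1, N)) % N


class Redeemer:
    """Verifier: accepts each validly signed token exactly once."""

    def __init__(self) -> None:
        self.spent: Set[bytes] = set()

    def redeem(self, token: bytes, signature: int) -> bool:
        if token in self.spent or pow(signature, E, N) != token_hash(token):
            return False
        self.spent.add(token)
        return True


token = secrets.token_bytes(16)        # browser picks a random token
blinded, r = blind(token)              # the issuer only ever sees `blinded`
signature = unblind(issuer_sign(blinded), r)
site = Redeemer()
print(site.redeem(token, signature))   # True: a valid, unspent token
print(site.redeem(token, signature))   # False: tokens are single-use
```

Because the issuer signed only the blinded value, it cannot match the signature presented at redemption to the issuance session that produced it.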